Over the last few years, the digital world has been engulfed in data. Top Big data technologies have grabbed countless headlines, proving to be the Swiss Army knife of the modern digital era. The year 2022 promises further breakthroughs in Big data technologies, which come in many forms and shapes.

But which of them will make it into the must-have tech stack of global businesses? And which of them should you bet on in 2022? Let's go over the Big data tools and technologies that will rock the tech world this year.

What is Big data technology?

We all know that you can’t successfully navigate the business field without data-driven actions or Big data business models.

Big data is a phenomenon born of new technological advancements, which emerged to analyze the growing amounts of information flowing from numerous devices.

From a historical perspective, Big data is a relatively new term. It gained prominence in 2008, when Clifford Lynch used it in a Nature article to describe the explosive growth of information flows.

Today, it denotes the ocean of information we dive into every day: zettabytes of data generated by our computers, mobile devices, and hardware sensors.

It spans all kinds of inputs, from user reviews to automated traffic violation monitoring. The global datasphere reached 64 zettabytes in 2020, with IoT data the fastest-growing segment, followed by social media.

Why collect that much information? Because this data lays the groundwork for AI solutions. Together, they help companies make effective decisions, optimize processes, and build customer-centric experiences.

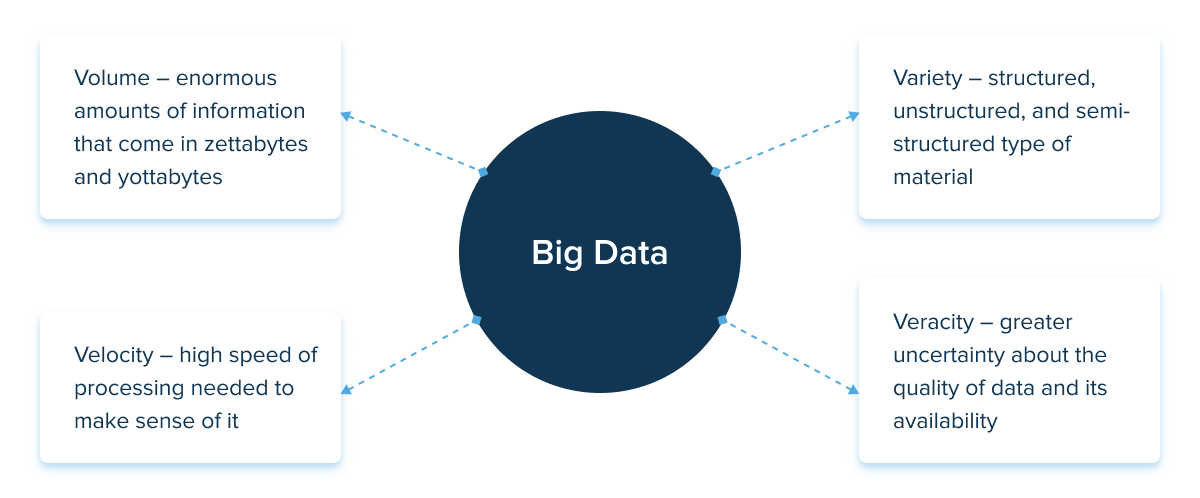

The classic differentiators of this type of information are known as the Vs: volume, velocity, and variety.

There are lots of other Vs that set this phenomenon apart. However, these are the classic pointers associated with this type of information. Looking at them, we can already see how hard it is to gather, clean, and process this avalanche of information.

But what exactly is Big data technology? It is software that analyzes large, complex data sets and extracts insights from them. Unlike traditional data management tools, it can handle information at massive scale.

Big data technology also covers the full suite of processing techniques, such as mining, storage, sharing, and reporting. Big data technologies and techniques also often complement machine learning, deep learning, computer vision, and IoT.

Types of Big data technologies

Big data technologies cover a gamut of techniques and tools that facilitate the effective collection, storage, and visualization of information. When weighing which technologies to use, you will encounter two types of solutions: operational and analytical technologies.

Operational technologies

This software type handles the vast amounts of data generated every day by online orders, social media, corporate systems, and similar sources. Operational technologies also serve as a bridge between this information and analytical systems.

NoSQL systems, such as document databases, have emerged to address a wide range of operational tasks. Besides handling user interactions with data, most operational systems must also provide some level of real-time insight into the active data they hold.

Analytical technologies

This technology type is more complex than the operational one. Analytical technologies help assess actual performance and inform crucial business decisions, which makes them well suited to retrospective (post-facto), advanced analysis of large data sets. This workload is usually addressed by massively parallel processing (MPP) database systems and MapReduce.
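To make the MapReduce model more concrete, here is a minimal single-process Python sketch of the classic word-count job. It only mimics the three stages (map, shuffle, reduce) in memory; the function names are hypothetical and not part of Hadoop's or any other framework's API, and a real cluster would run the map and reduce steps in parallel across many machines.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(document: str) -> Iterator[tuple[str, int]]:
    # Emit a (word, 1) pair for every word in one input split.
    for word in document.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    # Group intermediate values by key, as the framework does between map and reduce.
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    # Combine all values for one key into a single result.
    return key, sum(values)


def word_count(documents: list[str]) -> dict[str, int]:
    # On a cluster, each document (split) would be mapped on a different node.
    intermediate = (pair for doc in documents for pair in map_phase(doc))
    grouped = shuffle_phase(intermediate)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["big data needs big tools", "data tools scale out"]))
```

Because each map call only sees its own split and each reduce call only sees one key, both stages can be distributed independently, which is what lets the model scale out.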

However, these two technology types aren’t mutually exclusive. On the contrary, companies usually implement both to navigate the terrain of hidden insights.

Best Big data technologies you must know in 2022

The surge and complexity of information cannot be addressed without advanced tools that sift through it. Much like a prospector's pan, Big data technologies and techniques wash the noise over the edge while the valuable insights settle at the bottom. They must also handle large volumes of data at high speed and cope with both structured and poorly structured information.

NoSQL databases

First on our list of Big data technologies are NoSQL databases. NoSQL technology entered the fray as an alternative to relational (SQL) databases, which store only structured information in predefined schemas and are hard to scale out across modern distributed computing architectures.

NoSQL databases implement scalable information storage with a flexible data model. They can store input that has no fixed structure or predefined relationships. Instead of structured tables, many of these databases store heterogeneous documents, including images, videos, and even social media posts.
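The flexible model can be illustrated with a toy, in-memory document collection in Python. The `DocumentStore` class below is hypothetical and is not the API of any real NoSQL product; it only shows how documents with different fields can live side by side in one collection and still be queried.

```python
from __future__ import annotations

from typing import Any


class DocumentStore:
    """Hypothetical in-memory document collection, for illustration only."""

    def __init__(self) -> None:
        self._docs: dict[int, dict[str, Any]] = {}
        self._next_id = 1

    def insert(self, doc: dict[str, Any]) -> int:
        # No schema is enforced: every document may carry different fields.
        doc_id = self._next_id
        self._docs[doc_id] = dict(doc)  # store a shallow copy
        self._next_id += 1
        return doc_id

    def find(self, **criteria: Any) -> list[dict[str, Any]]:
        # A document matches only if it has every requested field with the
        # requested value; documents lacking a field are skipped, not rejected.
        return [
            doc
            for doc in self._docs.values()
            if all(key in doc and doc[key] == value for key, value in criteria.items())
        ]


if __name__ == "__main__":
    store = DocumentStore()
    store.insert({"type": "post", "user": "ana", "text": "Loving the new release!", "likes": 42})
    store.insert({"type": "image", "user": "ana", "url": "https://example.com/cat.png", "width": 800})
    store.insert({"type": "order", "customer": "bob", "items": ["sku-1", "sku-2"]})
    print(len(store.find(user="ana")))  # 2
```

A relational table would force the post, the image, and the order into one fixed set of columns; here each record keeps only the fields it actually has.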

NoSQL systems aim to leverage new cloud computing architectures that allow massive computations at affordable costs. This makes operational workloads easier to handle and cheaper to implement. Thanks to NoSQL technology, companies can store, retrieve, and process volumes of varied real-time data with greater agility.

Data lakes

The massive amount of information also led to the introduction of more advanced storage methods. Data lakes are repositories that allow users to store data of any type and scale. Thus, data lakes can gather input from any business-related systems – from CRMs and ERPs to sensors and smart devices.
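Data lakes are commonly described as "schema-on-read": raw records are stored as-is, and structure is applied only when someone queries them. The Python sketch below illustrates that idea with an in-memory list standing in for the lake's raw zone. `RAW_ZONE` and `read_with_schema` are hypothetical names, and a real lake would keep files in object storage rather than in a Python list.

```python
import json

# Hypothetical raw zone: records land unchanged, whatever system produced them.
RAW_ZONE = [
    '{"source": "crm", "customer_id": 17, "name": "Acme Ltd", "region": "EU"}',
    '{"source": "sensor", "device": "t-101", "temp_c": 21.4, "ts": "2022-03-01T10:00:00Z"}',
    '{"source": "sensor", "device": "t-102", "temp_c": 19.8, "ts": "2022-03-01T10:00:05Z"}',
]


def read_with_schema(raw_lines, source, fields):
    """Apply a schema at read time: parse, filter by source, keep only requested fields."""
    rows = []
    for line in raw_lines:
        record = json.loads(line)
        if record.get("source") != source:
            continue
        # Missing fields become None instead of failing the whole read.
        rows.append({field: record.get(field) for field in fields})
    return rows


if __name__ == "__main__":
    print(read_with_schema(RAW_ZONE, "sensor", ["device", "temp_c"]))
```

Because no schema is imposed on write, a new source (say, an ERP export) can be dropped into the raw zone immediately; each consumer decides which fields it needs at query time.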

The benefits of these cloud Big data technologies include scalability and versatility of data formats, which in turn reduce data management costs. Moreover, data lakes allow data to be processed where it is stored. BI systems, for example, help businesses perform in-depth analytics and predictive modeling and visualize the results.

From the end-user perspective, data lakes are projected to blossom across healthcare settings, where the variety of medical records creates a breeding ground for this technology. For example, Amazon released Amazon HealthLake, a HIPAA-eligible service. It caters to healthcare and life sciences companies and provides a holistic view of health records for query and analytics at scale.

Artificial intelligence

To put Big data to work, companies need automation tools capable of wading through vast amounts of information. Artificial intelligence is exactly this type of tool. Together, AI and Big data create unprecedented analytic capabilities.

Research suggests that this duo could automate almost 80% of predictable physical work, 70% of data processing work, and 64% of data collection tasks. In other words, artificial intelligence can amplify every phase of the data life cycle, including gathering, storage, and retrieval. It also makes building analytical models far less tedious and manual: analysis that once relied on hand-built statistical models and SQL queries has merged with computing to become AI and machine learning.

Using natural language processing, AI can distinguish information types and find possible links between datasets. It can also recognize common human error patterns and detect and address potential data issues. The relationship is mutually beneficial, too: the more data an AI algorithm has, the more accurate its results tend to be.

Predictive analytics

Next on our list of Big data tools and technologies is predictive analytics. The ability to look into the future and predict outcomes is one of the main treasures of Big data. Predictive analytics, in turn, feeds on historical information to build predictive models and prepare businesses for future changes.

Predictive analytics is indispensable across various industries. It powers fraud detection systems, ad campaigns, and precision healthcare. This type of analytics is crucial when a company needs to find hidden patterns, segment products, or forecast sales.
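As a simple illustration of sales forecasting, the sketch below fits a straight-line trend to past monthly sales with ordinary least squares and extends it forward. It is a deliberately minimal toy under the assumption that sales follow a linear trend: real predictive models usually account for seasonality, promotions, and many more variables, and the function names are hypothetical.

```python
from __future__ import annotations


def fit_linear_trend(values: list[float]) -> tuple[float, float]:
    """Ordinary least squares fit of y = a + b*t, with t = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    ts = range(n)
    t_mean = (n - 1) / 2
    y_mean = sum(values) / n
    # Slope = covariance(t, y) / variance(t); intercept passes through the means.
    cov = sum((t - t_mean) * (y - y_mean) for t, y in zip(ts, values))
    var = sum((t - t_mean) ** 2 for t in ts)
    slope = cov / var
    intercept = y_mean - slope * t_mean
    return intercept, slope


def forecast(values: list[float], periods: int) -> list[float]:
    """Extend the fitted trend `periods` steps past the last observation."""
    a, b = fit_linear_trend(values)
    n = len(values)
    return [a + b * (n + k) for k in range(periods)]


if __name__ == "__main__":
    monthly_sales = [100, 110, 120, 130]
    print(forecast(monthly_sales, 2))
```

For sales of 100, 110, 120, and 130 units, the fitted trend is 100 + 10t, so the next two months are forecast at 140 and 150 units.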

Prescriptive analytics

The global prescriptive analytics market is projected to grow from $4.9 billion in 2021 to over $14.3 billion by 2026. The ever-changing dynamics of business operations are driving this growth. Prescriptive analytics relies on Big data and artificial intelligence. Together, they identify and recommend actions for dealing with future scenarios.

Amazon, for example, turns to prescriptive analytics to recommend similar products to shoppers based on their original purchases. Prescriptive analytics can also help a company discern potential future opportunities and their likely outcomes. As more information becomes available, it can adjust its recommendations.
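The recommendation step can be sketched with a basic "frequently bought together" heuristic: count how often pairs of items appear in the same order, then suggest the most frequent companions. This is an illustrative assumption, not Amazon's actual method, and the function names and sample orders are hypothetical.

```python
from collections import Counter
from itertools import permutations


def build_co_occurrence(orders):
    """Count how often each ordered pair of distinct items appears in the same order."""
    pairs = Counter()
    for order in orders:
        # set() ignores duplicate lines of the same item within one order.
        for a, b in permutations(set(order), 2):
            pairs[(a, b)] += 1
    return pairs


def recommend(item, pairs, top_n=2):
    """Return the items most often bought together with `item`."""
    scores = Counter({b: count for (a, b), count in pairs.items() if a == item})
    return [other for other, _ in scores.most_common(top_n)]


if __name__ == "__main__":
    orders = [
        ["camera", "sd_card", "tripod"],
        ["camera", "sd_card"],
        ["camera", "bag"],
        ["tripod", "bag"],
    ]
    pairs = build_co_occurrence(orders)
    print(recommend("camera", pairs, top_n=1))  # ['sd_card']
```

Re-running `build_co_occurrence` as new orders arrive is a crude version of the "adjust as more information becomes available" behavior described above.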

Blockchain

Blockchain has remained the technology of the hour for years on end. It is touted as the savior of legacy infrastructures in banking and IT. But what does it offer as a Big data technology? Applied to the information field, blockchain can reduce storage costs for ledger-based transactional data.

Storing such massive datasets with traditional cloud storage providers like AWS or Azure is expensive. A pilot project by Storj and other decentralized storage providers reported cost savings of up to 90% compared with AWS.

Blockchain can also make analytics tools more accessible by decentralizing the underlying technology. It can make information exchange more secure and easier, with minimal infrastructure costs. This combination bodes well for data quality, since data validated on a blockchain is structured and, once recorded, effectively immutable.
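The immutability comes from hash-linking: each block stores the hash of the previous one, so editing any earlier record invalidates the chain from that point on. The Python sketch below shows only that linking and verification step, using SHA-256 from the standard library; it has no consensus protocol, networking, or signatures, and the function names are hypothetical.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, data, prev_hash):
    """SHA-256 over the block's contents, including the previous block's hash."""
    payload = json.dumps({"index": index, "data": data, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records):
    """Link records into blocks, each pointing at the hash of the one before."""
    chain = []
    prev = GENESIS_PREV
    for i, data in enumerate(records):
        h = block_hash(i, data, prev)
        chain.append({"index": i, "data": data, "prev": prev, "hash": h})
        prev = h
    return chain


def is_valid(chain):
    """Recompute every hash; an edited record breaks the chain from that block on."""
    prev = GENESIS_PREV
    for block in chain:
        if block["prev"] != prev:
            return False
        if block_hash(block["index"], block["data"], block["prev"]) != block["hash"]:
            return False
        prev = block["hash"]
    return True


if __name__ == "__main__":
    chain = build_chain([{"tx": 1, "amount": 50}, {"tx": 2, "amount": 75}])
    print(is_valid(chain))  # True
    chain[0]["data"]["amount"] = 500  # tamper with an earlier record
    print(is_valid(chain))  # False
```

Even if a tamperer recomputed the edited block's hash, the next block's stored `prev` value would no longer match, so the change would still be detected.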

R programming

Another rising technology is the R programming language. It is mainly used for statistical computing and visualization. R offers a set of algorithms geared towards machine learning, supporting time series analysis, classification, clustering, linear modeling, and more.

It is one of the leading programming languages of data science and helps address the challenges of Big data processing. For example, R can collect input through web scraping and other means, perform data cleansing, and convert raw figures into the desired format.

Hadoop

Hadoop is one of those Big data tools and technologies known to virtually every data science company. It is an open-source software framework used to develop data processing applications that run in a distributed computing environment.

Hadoop offers massive storage for any type of input. It also boasts enormous processing power and the ability to handle concurrent tasks. In recent years, the Hadoop framework has been used extensively by large technology businesses such as Facebook and Yahoo. It has also made it into the tech stacks of leading financial and insurance companies, research institutes, and other organizations.
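Hadoop's storage layer, HDFS, splits each file into large blocks (128 MB by default in Hadoop 2 and later) and keeps several copies of every block (three by default) on different nodes. The Python sketch below mimics that idea on a tiny scale; the function names are hypothetical, and the simple round-robin placement is an assumption for illustration, whereas real HDFS uses a rack-aware placement policy.

```python
from __future__ import annotations


def split_into_blocks(data: bytes, block_size: int) -> list[bytes]:
    """Cut a file's bytes into fixed-size blocks; the last block may be shorter."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_replicas(num_blocks: int, nodes: list[str], replication: int = 3) -> dict[int, list[str]]:
    """Assign each block to `replication` distinct nodes, rotating the starting node.

    Simplified round-robin placement; real HDFS uses a rack-aware policy.
    """
    if replication > len(nodes):
        raise ValueError("replication factor exceeds the number of nodes")
    placement: dict[int, list[str]] = {}
    for block_id in range(num_blocks):
        start = block_id % len(nodes)
        placement[block_id] = [nodes[(start + r) % len(nodes)] for r in range(replication)]
    return placement


if __name__ == "__main__":
    # Tiny block size for illustration only.
    blocks = split_into_blocks(b"x" * 300, block_size=128)
    print([len(b) for b in blocks])  # [128, 128, 44]
    print(place_replicas(len(blocks), ["node1", "node2", "node3", "node4"]))
```

Spreading replicas across nodes is what lets the cluster survive a failed machine and lets processing jobs run next to whichever copy of the data is closest.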

Tableau software

Tableau is one of the best Big data technologies for visualizing business analytics. It can connect to files, relational databases, and Big data sources to collect and process information, allowing companies to analyze large amounts of information quickly and cost-effectively.

The main differentiator of this tool is its ability to retrieve data from dozens of sources. Engineers can load anything from an Excel file to an entire Oracle database to get the full picture.

Kubernetes

Kubernetes is one of the cloud Big data technologies originally developed by Google. Essentially, its main use lies in vendor-agnostic cluster and container management. In its early days, Kubernetes wasn't fit for managing Big data workloads.

Today, new advancements allow this tool to support large-scale infrastructures. Kubernetes operators for tools like Apache Kafka and Apache Cassandra make it easier to deploy Big data solutions. They also improve portability, since the same Big data software can be deployed in a variety of environments.

ElasticSearch

Last but not least on our list of cloud Big data technologies is ElasticSearch, a fast, horizontally scalable search and analytics engine. It stores data as JSON documents, much like a NoSQL document database, and can be applied to all types of data. ElasticSearch can analyze large volumes of information in near real time.

Global companies and especially budding businesses rely on ElasticSearch to perform log analysis, full-text search, as well as business intelligence and process monitoring. It can perform extremely fast searches and can be scaled up to thousands of servers.
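ElasticSearch is built on Apache Lucene, and the core structure behind its fast full-text search is an inverted index: a map from each term to the documents that contain it. The Python sketch below builds a tiny inverted index over log lines and answers AND queries. It is a simplified illustration with no relevance scoring, sharding, or language analyzers, and the function names and sample logs are hypothetical.

```python
from __future__ import annotations

import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    # Lowercase and keep runs of letters and digits; real analyzers do much more.
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs: dict[str, str]) -> dict[str, set[str]]:
    """Map each term to the set of document IDs that contain it."""
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index


def search(index: dict[str, set[str]], query: str) -> set[str]:
    """AND query: return the documents that contain every query term."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


if __name__ == "__main__":
    logs = {
        "log1": "ERROR disk full on node-7",
        "log2": "WARN disk latency high",
        "log3": "ERROR timeout contacting node-7",
    }
    idx = build_index(logs)
    print(sorted(search(idx, "error node-7")))  # ['log1', 'log3']
```

A query only touches the posting sets of its own terms instead of scanning every document, which is why this structure keeps searches fast as the data grows.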

The future of business intelligence

Real-time accessibility allows companies to process incoming information and generate actionable insights. Big data is what drives strategic decision-making on a real-time basis and gives a competitive edge.

In the coming years, we will certainly see more trailblazing technologies. They will help create new growth opportunities and an ever-evolving data flywheel. In 2022, artificial intelligence, predictive analytics, and blockchain seem to steal the show as robust processing tools. Hence, the power of decision-making will be in the hands of this trinity.

Enhance your business with Big Data technologies

Need a Big data engineering partner? Contact us and we'll get back to you soon to discuss your business needs and goals.