The e-commerce and retail industries have changed drastically in recent years. Young, tech-savvy shoppers are demanding a smarter shopping experience in which the journey from discovering a product to checking out is as short as possible.

To deliver such an experience, companies are turning to AI for help. The possibilities for offering a better shopping experience with AI, on both the backend and the frontend, are enormous.

Retail is an industry that is very well suited to AI because it’s rich with data, and more is generated every day. According to a global research study conducted by Zebra in 2017, 73% of all retailers consider managing big data a critical business move. Managing big data, and especially its quality, is essential for future success because only quality data can be used to train accurate machine learning models.

AI can be applied to different parts of e-commerce. Chatbots can automate customer service tasks and cut costs drastically. Deep learning algorithms can label products without human help and thereby make products easier to find through search. AI can also serve as a digital shopping assistant that offers personalized product recommendations.

Computer vision is becoming a disruptive force in the retail industry. The technology enables features such as image search, virtual fitting rooms, tracking how people move around a store, and much more. An estimated 75% of all retailers plan to invest in some form of cameras and video analytics by 2021 to enhance the customer experience, both in-store and online.

In this article, we are going to discuss one of the hottest applications of computer vision in retail – visual search.

What Is Visual Search and Why Is It Important

Visual search is the ability to search for content in visual media. A typical visual search engine is able to identify items in an image or a video and search across a catalog to find the same or similar items. Essentially, it allows a customer to search for an item (or something similar to it) by simply uploading a picture of it. For example, suppose you see a nice coat that an influencer is wearing on social media. By taking a screenshot of that picture, you can later upload it to a retailer of your choice to find the same coat or a similar item.

Images are often the starting point of a customer journey. Tech-savvy consumers, millennials especially, are interacting with pictures to discover products that are new to them or to find items that they have seen in real life. The reason is that image-based search is much more intuitive and versatile than text-based search. Modern consumers are constantly taking pictures of things they want, whether in daily life or on social media, but without visual search there is no easy way for them to find a similar item to buy. This makes every image an opportunity to engage your audience and convert them into customers. Visual search is making a splash because it leverages that opportunity. It delivers a seamless, tailored experience by removing the barrier that comes with not knowing anything about a product or not being able to describe it properly.

Big corporations have been experimenting with visual search for a few years now. Last year, Pinterest launched a few visual discovery tools. Pinterest Lens allows consumers to “discover ideas without having to find the right words to describe them” by uploading a picture of something they are interested in to the platform. The Instant Ideas feature lets customers get more similar pins by tapping a single button. Pinterest also introduced a “shop the look” feature that lets customers shop for clothes similar to the ones in a pin they like.

eBay launched a visual search tool in its app that allows customers to find similar items on eBay not only with pictures saved on their phones, but also with photos from any social platform. This is a good example of the omnichannel technology that consumers increasingly demand. It drives conversion and removes a lot of the friction in the buying process. And this is only the beginning. According to Gartner, by 2021, early adopter brands that redesign their websites to support visual and voice search will increase digital commerce revenue by 30%.

Another reason behind the popularity of visual search is the way it simplifies shopping on mobile devices. Many retailers get the majority of their revenue from sales on mobile devices. Asos reports that in 2017, 70% of its UK orders came from mobile devices. With the implementation of its visual search feature, the company expects sales to grow by 30%-35%. Pinterest states that 85% of all its searches are done on mobile devices, and that 87% of its users have bought something they found in pins.

How Visual Search Drives Real Business Results

Visual search not only delivers a better customer experience; it also drives real business results. With features like “shop the look”, companies have increased the average order size by 20%. Because the friction between “see” and “buy” has been minimized, consumers find it easier to purchase what they see on the internet.

Visual search technology can increase conversion rates by offering visually similar products as alternatives to out-of-stock items and in recommendations.

Visual search has also simplified the backend part of e-commerce through automatic product tagging. It’s well known that tagging products with as many descriptive labels as possible is important for making them easy to find. The problem with manual product tagging is that it’s highly time-consuming. Automatic product tagging makes it easier to organize and optimize the whole product catalog, for example by keeping track of stock levels and bestselling items across multiple channels.

In addition to the visual search engines embedded in the apps of leading retailers, many companies are investing in visual search chatbots. These chatbots function as digital personal assistants with whom you can chat and to whom you can send pictures of items you want to buy. Additionally, chatbots can function as virtual customer service personnel and handle as much as 80% of routine questions, according to IBM. This equates to a drop in cost per inquiry from $15-$200 (depending on the location) to almost $1.

The Technology Behind a Visual Search Engine

A successful visual search engine must be able to accomplish the following image processing tasks: multi-object detection and image similarity search.

At InData Labs, we use Convolutional Neural Networks to teach computers what different objects look like, so that they can identify the important objects within a photograph all by themselves.

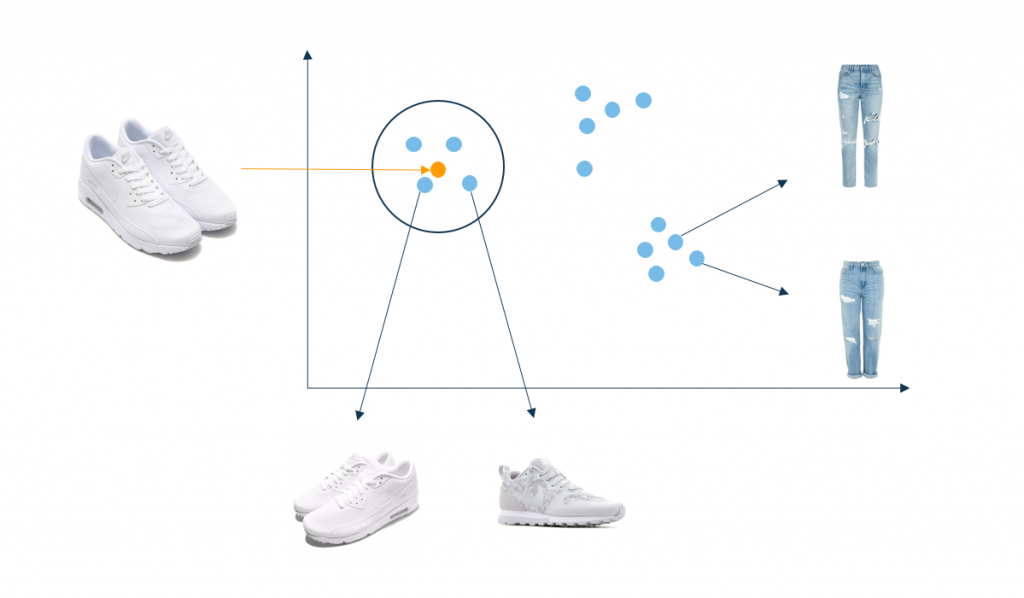

After detecting and cropping all fashion items from a query image, we use another neural network to convert each cropped image into a vector.

We also need to build a vector representation of every image in the catalog, which may generate thousands or millions of vectors depending on the size of a shop. The result will be a rich multi-dimensional vector space where similar images have feature vectors closer together than images with totally different content.
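To make "closer together" concrete, here is a minimal sketch with hand-made three-dimensional vectors. The vectors, the product names, and the choice of Euclidean distance are all illustrative assumptions, not our production setup; embeddings produced by a real neural network typically have hundreds or thousands of dimensions.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Hypothetical toy embeddings, invented purely for illustration.
red_coat = [0.9, 0.1, 0.8]
burgundy_coat = [0.8, 0.2, 0.7]
white_sneaker = [0.1, 0.9, 0.1]

# Two coats should sit closer to each other than a coat and a sneaker.
dist_similar = euclidean_distance(red_coat, burgundy_coat)
dist_different = euclidean_distance(red_coat, white_sneaker)
print(dist_similar < dist_different)
```

In this toy space, the two coats end up much nearer to each other than either is to the sneaker, which is the property a well-trained embedding model is expected to provide.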

Thus, for a data scientist, the problem of finding similar images in a product catalog is equivalent to finding the nearest vectors in that multi-dimensional space.
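As a rough sketch of that nearest-vector lookup, the snippet below ranks catalog items by cosine similarity to a query vector using a brute-force scan. The catalog contents, the product IDs, the `find_similar` helper, and the use of cosine similarity are assumptions made for illustration; they are not a description of a specific production system.

```python
import heapq
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction; treat it as dissimilar
    return dot / (norm_a * norm_b)


def find_similar(query_vector, catalog, k=2):
    """Return the k catalog items most similar to the query, best first."""
    scored = (
        (cosine_similarity(query_vector, vector), product_id)
        for product_id, vector in catalog.items()
    )
    return [(product_id, score) for score, product_id in heapq.nlargest(k, scored)]


# Hypothetical catalog: product ID -> precomputed feature vector.
catalog = {
    "coat-001": [0.9, 0.1, 0.8],
    "coat-002": [0.8, 0.2, 0.7],
    "sneaker-001": [0.1, 0.9, 0.1],
}

# Hypothetical vector for a cropped item from the customer's photo.
query = [0.85, 0.15, 0.75]
results = find_similar(query, catalog, k=2)
print(results)
```

A linear scan like this compares the query against every catalog vector, which is fine for a small shop. Catalogs with millions of vectors usually rely on an approximate nearest-neighbor index instead, trading a little accuracy for much faster lookups.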

In simplified form, the idea can be illustrated as in the image below:

What’s Next

Visual search engines still struggle to process images in the way we expect them to, but it’s safe to say that the technology provides an opportunity to monetize parts of the market that have previously been difficult to capture, and we’ll see much wider adoption of visual search in the future.

Start Your Computer Vision Project with InData Labs

Have a project in mind but need some help implementing it? Schedule an intro consultation with our machine learning engineers to explore your idea and find out if we can help.