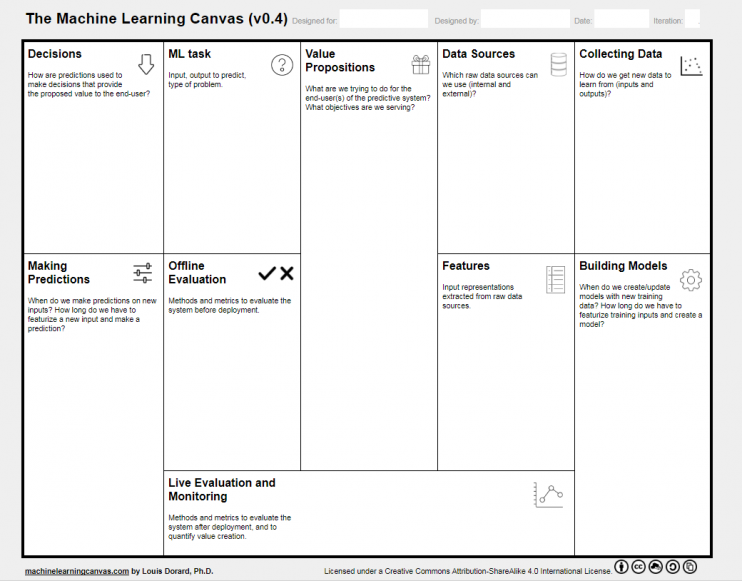

Since the release of Osterwalder’s Business Model Canvas in 2008 new canvases for specific niches have appeared. Today we have canvases for creating new gamification models, canvases for event design, for shaping a corporate culture and even for developing machine learning applications.Machine Learning Canvas is a template for designing and documenting machine learning systems. It has an advantage over a simple text document because the canvas addresses the key components of a machine learning system with simple blocks that are arranged based on their relevance to each other. This tool has become popular because it simplifies the visualization of a complex project and helps to start a structured conversation about it.

In this article, I’ll describe each block of the Machine Learning Canvas with examples and practical tips from our data scientists. Without further ado, let’s get started!

Value Proposition

In the heart of the canvas, there is a value proposition block. The main questions to answer here are:

- Who is the end user of the predictive system?

- What are we trying to do for the end user of the system?

- What objectives are we serving? Why is it important?

Only after answering these ‘who’, ‘what’ and ‘why’ questions, you can start thinking about a number of the ‘how’ questions concerning data collection, feature engineering, building models, evaluation and monitoring of the system.

Learning from Data

Let’s continue by looking at the right-hand side of the canvas that is dedicated to learning from data. It consists of the following blocks: data sources, collecting data, feature engineering, building models.

Data Sources

Which raw data sources can we use (internal and external)?

This block poses a question about which raw data sources we can use. This step does not require specifics of the data you are planning to collect but it forces you to think about the data sources you are going to use. Some examples of data sources that you can consider are internal databases, open data, research papers in your domain, APIs, web scraping, output from other machine learning systems, etc.

Collecting Data

How do we get new data to learn from (inputs and outputs)?

This block addresses a problem of collecting and preparing data to learn from. Machine learning project cannot exist without a training dataset — preferably, a large dataset with lots of labeled data. It means that your learning system will need both example inputs and their desired outputs. Only after learning from data that is labeled with the correct answer, your ML model can be used to make predictions on new data.

Often, data is not initially available in a labeled form, and it’s important to have a plan for collecting and preparing a dataset that would be representative of the real-life data that the model will use as input to make a prediction. Only if the data provided as input is of good quality, then the learning algorithm developed will be of good quality as well.

For example, if you want to build an algorithm for predicting whether an Instagram account is fake or real, firstly you will need humans to label accounts as either real or fake. It’s not a complex task for a human to do, but depending on how much data you need, this can get expensive because it requires many hours of manual work.

There are, however, ways you can acquire data in a more cost-effective manner. Instagram, for example, allows its users to report images and profiles in their feed as spam. Users label data for Instagram algorithms free of charge, approving the best posts with likes and reporting inappropriate content as spam. Instagram then uses the feedback to fight fraud and spam accounts and to make the feed personalized for every customer.

It’s important to note that the most accurate machine learning systems to date are those that use a “human-in-the-loop” approach. This method leverages both machine and human intelligence. When the machine isn’t sure it’s making the right prediction, it relies on a human, then adds the human answer to its model. “Human-in-the-loop” approach helps to get new data of good quality and to improve the accuracy of your model over time.

There are also projects that you can start without a labeled dataset. These are projects where you solve unsupervised machine learning tasks like anomaly detection or audience segmentation (clustering).

Feature Engineering

Input representations extracted from raw data sources.

Once you have the labeled data, you need to convert it to a format that is acceptable to your algorithm. In machine learning, the process is called feature engineering. The initial set of raw features can be redundant and too large to be managed. Therefore, data scientists need to select the most important and informative features to facilitate learning. Feature engineering requires a lot of experimentation and the combination of automated techniques with the intuition and domain expertise.

‘We use simple machine learning techniques like gradient boosting or linear regression to select and interpret features. Coefficients of regression models automatically provide estimates of feature importance. We train a model several times with different hyperparameter configurations to make sure that rankings of the features are reliable and don’t change significantly from experiment to experiment. ‘ – says Eugeny, Data Scientist at InData Labs.

If you are a domain expert (not a data scientist) you should specify which features are the most important from your point of view, this will be very helpful for data engineers in the future. If you find yourself listing too many features, try combining them into feature families. This will make optimizing the model easier.

Many machine learning consulting experts believe that properly selected features are the key to effective model construction. So be sure to elaborate on this block.

Building and Updating Models

When do we create/update models with new training data? How long do we have to featurize training inputs and create a model?

This block addresses the question of when you create/update your model with new data. There are primarily two reasons to continuously update your model. Firstly, new data improve your model. Secondly, it allows you to capture any changes in the phenomenon you are working with. How often your model needs an update will depend on what you are trying to predict.

If your model predicts sentiment of a phrase, there is no need for updating it daily or weekly. The structure of texts changes very slowly or doesn’t change at all. So you might plan an update when you get additional training examples to improve your model.

On the other hand, there are models that work in a fast-changing environment. For example, if you are making predictions about your customer behavior you should constantly check how the model works for the new users. A significant change of the size or the structure of your audience may require an update of the model with new data.

Sometimes updates take more time and more processing power than you actually have. In that case, you need to compromise between cost, time and the quality of your model.

The key takeaway from this block is that your model is not built once and forever, it should change over time, just like all things in the world.

Making Predictions

The left-hand side of the canvas is dedicated to making predictions and consists of the following blocks: machine learning task, decisions, making predictions, offline evaluation.

Machine Learning Task

Input, output to predict, type of problem.

This block aims to define a machine learning task based on input, output, and type of problem. The most common machine learning tasks are classification, ranking, and regression.

If you are predicting what something is, you have a classification task. The output to predict will be class labels. In binary classification, there are two possible output classes. In multi-class classification, there are more than two possible classes. The problem of predicting fake Instagram account that we discussed earlier is an example of binary classification.The input data could include profile name, profile description, number of posts, number of followers, and the output label is either “fake” or “not fake.”

If you are trying to predict a numerical value (an output is a numeric score), you are dealing with a regression task. For example, when we try to predict real estate prices on future days given price history and other information about the building and the market, we can treat it as a regression task.

If you are trying to determine the right thing for a given person or situation, then you are solving a ranking task. Building a movie recommender system or a search engine often involves solving a ranking task. The output is a ranked list of items for each user.

Decisions

How are predictions used to make decisions that provide the proposed value to the end-user?

Before the training data is collected and a model is built, you and your team are forced to articulate how the predictions will be used to make decisions that provide value to the end users. This is a very important question for every ML project, as it’s closely connected with the profitability of the project. As discussed earlier a successful machine learning system should generate additional value for its users. This block serves as a checkpoint of that criteria.

The value of predictions is questionable unless they are used for making decisions. Like ideas that never transform into action, predictions without interpretation bring no value, and it doesn’t matter how accurate they are.

For a machine learning system to impact decision-making process in a truly meaningful way, predictions must be delivered on time. A common mistake that many companies make is building a machine learning model that is supposed to make predictions online and then discovering that they can’t get real-time data for it. Be aware of time when planning your ML project and make sure the right data is available at the right time to deliver predictions that you can act upon.

An output of a machine learning system is not always the result that the end-user is looking for. For example, a churn prediction model helps to predict who is likely to churn within a month, but what the end users want is churn preventionstopping customers from churn in a cost-effective manner). There are a number of steps that should be done to get from churn prediction to churn prevention, and the owner of the ML project must be able to describe these steps up front. If you can’t explain how the predictions will be used to make decisions that provide value to the end users, stop here, and don’t move forward unless you find the answer.

Making Predictions

When do we make predictions on new inputs? How long do we have to featurize a new input and make a prediction?

This block addresses the following questions: ‘When do we make predictions on new inputs?’ and ‘How long do we have to featurize a new input and make a prediction?’

Some models allow updating predictions for every user separately. In this case, you may consider several approaches to model update:

- new predictions are made each time a user opens your application

- new predictions are made on request, a user may ask for an update by clicking a special button in your app

- predictions update is triggered by some event, for example when a user submits new important information

- new predictions are made on schedule for all users, for example, once a week.

There are also systems, where predictions for different users are interrelated, and you can’t make an update for one user without updating the whole system. Such universal updates take more time, and more processing power, and therefore require more planning. The time you need for predictions update must comply with the desired frequency of updates.

For example, if you are going to build a movie recommender system, first think how often recommendations should be updated on new inputs to be relevant and valuable for the users. Then you should check if this is possible given the speed of your ML system is limited. If you want to make updates daily and an update takes two hours that’s great news for you. If you think your recommendations are only valuable if updated every hour and an update takes two hours you need to compromise between cost, time and the quality of your model again.

Offline Evaluation

Methods and metrics to evaluate the system before deployment.

This block addresses a problem of the model’s performance evaluation before it goes into production. It’s important to plan methods and metrics to evaluate the system before deployment. Without a validation metric, you won’t be able to select the model that makes the best predictions and to answer, whether a model is good enough and when it’s ready to go to production. So make sure that you have metrics that are representative of what you are trying to achieve.

‘To evaluate a supervised machine learning algorithm we usually use k-fold cross-validation. This method implies training several ML models on (k-1) subsets of the available training data and evaluating them on the complementary subset reserved for evaluation. This process is repeated k times, with different validation data each time.This technique helps to avoid overfitting while using all available data for training.’ – says Eugeny, Data Scientist at InData Labs.

Another method of offline evaluation is the offline evaluation on live data. For example, if you are building a model for predicting real estate prices, you only have to wait for the real sales data to be available and compare your predictions to the live data.

Live Evaluation and Monitoring

Methods and metrics to evaluate the system after deployment, and to quantify value creation.

The last part of the canvas covers the online evaluation and monitoring of the model. This is where you’ll specify metrics to monitor the performance of your system after deployment (tracking metrics), and to measure value creation (business metrics). Aligning these two types of metrics will make everyone in your company happier. Ideally, there should be a direct relation between the quality of the model and business results.

The online phase has its own testing procedures. The most commonly used form of online testing is A/B testing. This method is rather simple, but it has some tricky rules and principles that you need to follow in order to set it up and interpret the results correctly.

A promising alternative to A/B testing is an algorithm called multi-armed bandits. If you have multiple competing models and your goal is to maximize the overall user satisfaction, then you might try running a multi-armed bandit algorithm.

When the model goes into production it interacts with real users, who can also provide a lot of valuable information about the accuracy of your model. You may collect this kind of live feedback conducting customer interviews or analyzing reviews and support requests.

You should also continue tracking the model’s performance on the validation metric on live data and make model updates before the quality of the model becomes unsatisfactory for the end users.

Thinking about live evaluation and monitoring methods upfront allows for better project planning because testing mechanics form an integral part of the machine learning system and require additional engineering work.

Conclusion

At InData Labs, we have been utilizing the machine learning canvas with most of our clients. In our experience, this tool proved to be useful for developing and structuring even the most complex AI projects.

I’d like to wind up by thanking Louis Dorard for his excellent thinking behind ML project principles and I would highly recommend you visit his blog where he shares his experiences in the field of machine learning.

This concludes our introduction to machine learning canvas. I hope it was helpful for you and wish you good luck with your future projects.