Large Language Models (LLMs) have quietly become the engines behind some of today’s biggest business shifts. Products from top LLM companies are writing emails, summarizing reports, powering chatbots, and helping teams move faster with fewer resources. As more companies explore what LLMs can do, a new wave of providers is stepping in, offering everything from plug-and-play APIs to full-scale, secure LLM development for the enterprise.

In this article, we highlight the top LLM companies leading the way in AI development, with a focus on firms that provide real business value—not just research breakthroughs. From enterprise-grade platforms to tailored consulting services, these are the players shaping the future of large language model use cases in practical, scalable ways.

InData Labs

Cyprus-based InData Labs has made a name among leading LLM companies by doing the unglamorous work really well—turning large language models into practical tools businesses can actually use. They don’t just show up with a pre-built solution; they dive into your data, your use case, and your constraints to figure out what will actually work.

Whether it’s training a custom model, building secure infrastructure, or developing an AI chatbot that reflects your brand rather than sounding like a generic assistant, the team is hands-on from day one. They also handle prompt engineering, integration, and long-term support, so you’re not left patching things up six months down the road.

What makes them stand out? It’s the way they work with clients—as collaborators, not just vendors. If you want an LLM partner that brings both technical depth and a sense of ownership, InData Labs is one of the most dependable names out there.

OpenAI

OpenAI hardly needs an introduction: it’s the company behind ChatGPT and one of the biggest names in the large language model world. What makes them stand out isn’t just the research, but how easy they’ve made it for businesses to start using powerful LLMs in real-world scenarios.

Through OpenAI’s APIs and enterprise tools, companies can plug natural language capabilities into everything from customer chatbots to internal documentation workflows. Whether you’re automating support, generating content, or building something more creative, OpenAI offers a fast track to deployment—without the need to reinvent the wheel.

Their reputation as one of the top LLM API providers comes from more than just reach. It’s the balance of innovation and practicality that keeps businesses of all sizes coming back—and the fact that OpenAI keeps pushing the envelope while staying mindful of responsible AI use.

Anthropic

Founded by researchers who left OpenAI, Anthropic now competes with its “ancestor” for position in the LLM market. The company’s flagship, Claude, can be characterized in one word: control.

Claude is designed to follow instructions closely, stay within clear ethical boundaries, and remain focused on its task. These characteristics have made the model popular in finance, law, and healthcare.

Anthropic’s sharp focus on security, alignment, and transparency sets it apart from the crowd. For organizations looking to integrate generative AI without giving up peace of mind, Claude is one of the smartest choices out there.

Cohere

Cohere is a Toronto-based LLM company specializing in enterprise-ready language models with a focus on retrieval-augmented generation (RAG), multilingual capabilities, and private deployments. Their platform helps businesses build powerful AI systems for semantic search, summarization, document classification, and conversational AI.

What makes Cohere stand out among large language model companies is its emphasis on flexible deployment. Clients can choose between public cloud, virtual private cloud, or on-premises setups, which is ideal for organizations with strict data compliance requirements. Cohere’s models are known for being lightweight, performant, and cost-effective, even at scale.

As one of the more developer-friendly LLM providers, Cohere offers robust APIs and tooling to help teams build and iterate quickly. Their combination of performance, control, and customization makes them a strong pick for mid-to-large organizations moving into Generative AI.

Mistral AI

Mistral AI, based in France, stands out for keeping things open. While many LLM providers lock their models behind APIs or commercial terms, Mistral publishes several of theirs for anyone to use. Models like Mistral 7B and Mixtral come with open weights under a permissive license. That approach hits home with teams that want flexibility.

Whether you’re building something specialized or need to meet strict compliance rules, it helps to start with a model you can actually see, tweak, and run locally.

If you’re looking for full control without reinventing the wheel, Mistral makes a strong case. It’s AI that doesn’t hide behind closed doors.

Aleph Alpha

Based in Germany, Aleph Alpha is gaining serious ground in the world of LLMs—especially among organizations that care about data sovereignty and compliance. Unlike many of its global peers, Aleph Alpha builds AI with European values and regulations in mind.

Their flagship model, Luminous, supports multiple languages and comes with deployment options that fit a range of security needs, including both cloud and on-premises setups. That flexibility makes it a solid choice for sectors like government, healthcare, or defense, where control and privacy aren’t optional.

What really sets them apart? Transparency. Aleph Alpha invests heavily in explainability tools, helping users understand how the model reaches its conclusions. For companies looking for responsible AI that doesn’t treat Europe as an afterthought, this is a name worth knowing.

AI21 Labs

AI21 Labs, based in Tel Aviv, is a standout among top LLM companies for its focus on language models that support advanced reading comprehension, reasoning, and content generation. The company’s flagship models, such as Jurassic-2, power a range of writing and knowledge-based applications for enterprises.

AI21 Labs serves as both an LLM API provider and a partner in end-to-end solution delivery. Their technology is used in everything from real-time writing assistants to document analysis systems, offering rich context understanding and language fluency.

With a growing client base in publishing, finance, and legal services, AI21 Labs is considered one of the best LLM companies for organizations looking to scale up human-like writing, summarization, and research capabilities with AI.

Google DeepMind

DeepMind, Google’s AI research division, is behind some of the most advanced breakthroughs in large language model development. Their Gemini models, which power the Gemini assistant (formerly Bard) and are available through Google Cloud, are built to support code generation, scientific reasoning, and multimodal interactions.

Known for its rigorous research foundation, DeepMind is one of the biggest LLM companies shaping the future of AI across both academia and enterprise use. From helping scientists accelerate protein discovery to powering tools that enhance business productivity, their models are optimized for high-stakes, complex reasoning tasks.

As part of Google’s ecosystem, DeepMind’s models are offered through Google Cloud Vertex AI, which makes it easier for businesses to integrate LLM capabilities into their workflows securely and at scale.

Meta AI

Meta isn’t just in the social media game anymore. It’s also behind one of the most widely used open-weight LLM families: LLaMA. These models give developers and researchers something rare in the LLM world: freedom.

You don’t need to go through someone else’s API. Within the terms of Meta’s license, teams can download the models, experiment, customize, and run them on their own infrastructure. That kind of openness is especially valuable if you’re working on in-house tools or trying out something unconventional.

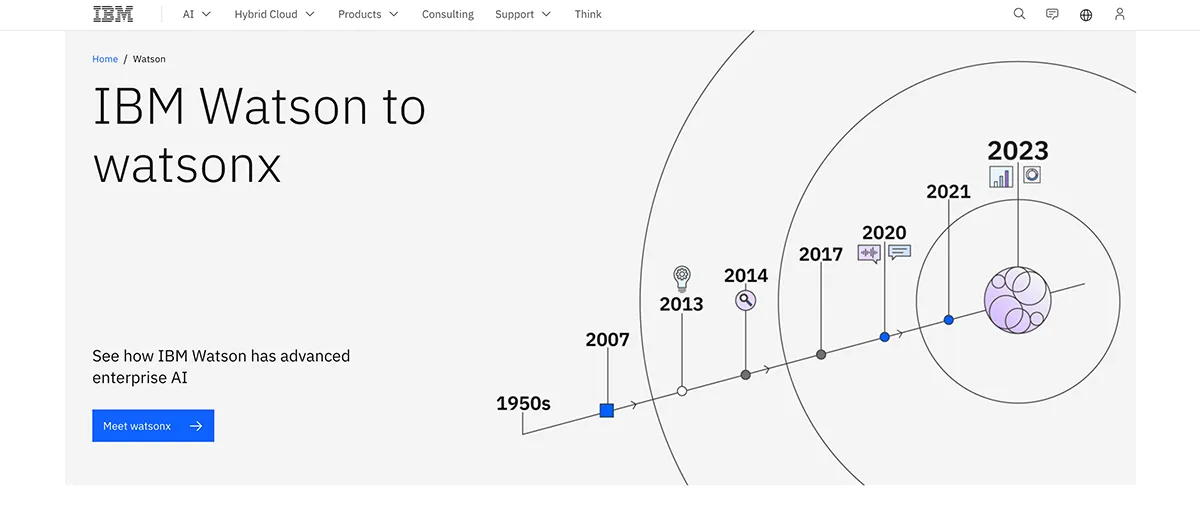

IBM Watson

IBM Watson has evolved from a question-answering system into one of the most enterprise-ready LLM platforms. Now integrated into watsonx, IBM’s next-generation AI and data platform, it supports the full lifecycle of large language model development, from training and fine-tuning to governance and compliance.

What sets IBM apart is its strong emphasis on LLM security and responsible AI. With built-in tools for model risk management, explainability, and auditability, watsonx.ai appeals to highly regulated industries like finance, government, and healthcare. Organizations can use pre-trained models or bring their own, tailoring LLMs to specific domains without compromising on privacy or control.

Stability AI

Stability AI made headlines with Stable Diffusion, but that was just the beginning. They’ve since moved into the LLM space with models like StableLM—open, accessible, and built for real-world use.

What makes them stand out? Transparency. Their model weights are published for download rather than locked behind an API. Developers can download, fine-tune, and use them to build large language model apps, within the terms of each release’s license.

Microsoft Azure AI

Microsoft didn’t just partner with OpenAI—they built an entire launchpad around it. Through Azure OpenAI Service, teams can plug models like GPT-4 straight into their tools and workflows, all while staying within the familiar Microsoft ecosystem.

But it’s more than just easy access. Azure wraps those models in a full enterprise environment: compliance support, usage analytics, customizable endpoints, and serious security baked in. That’s a big deal if you’re working in healthcare, banking, or anywhere else that doesn’t have room for risk. Many companies use Azure to prototype LLM applications quickly and then move into production without switching platforms.

Amazon Bedrock (AWS)

Amazon Bedrock, a relatively recent addition to the AWS family, offers a practical way for teams to build and run LLM-based tools—without getting tangled up in server setup or infrastructure headaches.

What sets it apart? Instead of locking you into just one model, Bedrock gives you access to several top-tier LLMs, including Claude from Anthropic, models from Cohere and AI21 Labs, plus Amazon’s own Titan line. This flexibility is a big deal: teams can compare and switch models through one service instead of integrating each provider separately.
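
To make the “not locked into one model” idea concrete, here is a minimal sketch of a provider-agnostic routing layer. It does not call Bedrock or any real SDK: `ModelRouter`, `fake_claude`, and `fake_titan` are hypothetical names used only for illustration, and a real backend would wrap the provider’s client in their place.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# A backend is any callable that maps a prompt to generated text.
Backend = Callable[[str], str]


@dataclass
class ModelRouter:
    """Routes prompts to interchangeable backends registered by name."""

    backends: Dict[str, Backend]

    def generate(self, model: str, prompt: str) -> str:
        if model not in self.backends:
            raise KeyError(f"unknown model: {model}")
        return self.backends[model](prompt)


# Hypothetical stand-ins; real backends would call a provider SDK here.
def fake_claude(prompt: str) -> str:
    return f"[claude-stub] {prompt}"


def fake_titan(prompt: str) -> str:
    return f"[titan-stub] {prompt}"


router = ModelRouter({"claude": fake_claude, "titan": fake_titan})
print(router.generate("claude", "Summarize this contract."))
```

Because application code only depends on `generate`, swapping or comparing models becomes a one-line change at the call site.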

NVIDIA AI

While NVIDIA isn’t a traditional large language model development company, its influence on the large language model ecosystem is unmatched. As the backbone of modern LLM infrastructure, NVIDIA provides the GPU hardware and software tools that power most training and inference tasks for leading models—including those from OpenAI, Meta, and Cohere.

NVIDIA’s NeMo framework offers powerful tools for building, customizing, and deploying LLM-based solutions, and its Megatron-LM training library has been used to train several major large language models.

Business benefits of LLM integration

Ask anyone, and you’ll hear the same thing: there’s too much to read, too much to write, and never enough time. One of the key benefits of LLMs is how much of this burden they can take on.

Here’s what that looks like in practice:

They take the grunt work off your plate

Say your team spends hours every week answering the same kinds of support emails or writing basic internal documentation. With an LLM in place, a good chunk of that can be handled automatically. It’s not about replacing people—it’s about freeing them up to do the work that actually needs a human brain.

They help you catch patterns you’d miss on your own

Digging through surveys, support tickets, or feedback forms is a slog. But buried in there? Insights you’d probably want yesterday. With an LLM, you can spot the top 10 complaints, see what people keep asking for, and figure out what’s working (or not). Less noise, more signal.
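
As a rough sketch of the “top complaints” idea, the snippet below labels each ticket and tallies the labels. The `keyword_classifier` function is a hypothetical stand-in for an LLM call, and the sample tickets are invented for illustration; in practice you would pass in a function that asks your model for a category.

```python
from collections import Counter
from typing import Callable, Iterable, List, Tuple


def top_complaints(
    tickets: Iterable[str],
    classify: Callable[[str], str],
    n: int = 10,
) -> List[Tuple[str, int]]:
    """Label each ticket with `classify`, then return the n most common labels."""
    counts = Counter(classify(t) for t in tickets)
    return counts.most_common(n)


# Hypothetical keyword classifier standing in for an LLM call.
def keyword_classifier(ticket: str) -> str:
    text = ticket.lower()
    if "refund" in text or "charge" in text:
        return "billing"
    if "slow" in text or "crash" in text:
        return "performance"
    return "other"


tickets = [
    "I was charged twice this month",
    "The app is slow on startup",
    "Please refund my order",
    "How do I export my data?",
]
print(top_complaints(tickets, keyword_classifier, n=2))
```

Keeping the classifier as a plain function argument means the counting logic stays the same whether the labels come from keywords or from a model.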

They make it feel more personal

You know when a chatbot actually understands your question—and doesn’t just spit out a canned line? That’s probably an LLM behind the scenes. They adapt. They learn how users talk. And they help your apps respond like a real person, not a rule-based script.

They keep things moving at scale

Launching new product pages in five languages? Need 50 versions of ad copy? Want A/B test variants but don’t have a content team on standby? LLMs can crank out decent drafts fast. You’ll still want a human touch to polish it—but the heavy lifting’s done.

They make compliance less of a headache

Nobody loves checking policy docs or combing through contracts for red flags. But someone has to do it. With the right training, an LLM can help flag risky clauses, surface missing language, or identify sensitive info before it slips through. It’s like a second set of eyes that never gets tired.
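
One common companion to an LLM review is a simple rule-based pre-screen that catches obvious sensitive data before a document goes anywhere. The sketch below uses regular expressions, not an LLM, and its two patterns (`email`, `us_ssn`) are illustrative assumptions rather than a complete or vetted rule set.

```python
import re
from typing import Dict, List

# Illustrative patterns only; real compliance checks need a vetted rule set.
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_sensitive(text: str) -> Dict[str, List[str]]:
    """Return every match per pattern name, omitting patterns with no hits."""
    hits = {name: pat.findall(text) for name, pat in PATTERNS.items()}
    return {name: found for name, found in hits.items() if found}


print(find_sensitive("Contact jane@example.com, SSN 123-45-6789."))
```

A check like this is cheap to run on every document, which leaves the model to handle the judgment calls, such as risky clauses or missing language.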

They help developers ship faster

Developers use LLMs for everything from writing unit tests to debugging gnarly functions to spinning up boilerplate code. And it’s not just hobbyists—many top LLM providers now offer dev-focused tools that plug right into your IDE. Less grunt work, fewer roadblocks, faster releases.

How to hire LLM providers

Choosing an LLM development company or AI consulting partner for your project isn’t just about big names or slick pitches. It’s about finding a team that understands your space and builds with your real needs in mind. Here’s what to watch for:

Pick someone who knows your industry

If they’ve never worked in your domain, expect a steep learning curve. Good large language model companies already understand your jargon, workflows, and challenges—which means fewer surprises and better results.

Ask how they plan to do the work

It’s easy to say “we’ll build something scalable.” Harder to explain how. Ask about training data, evaluation, and deployment. If they dodge those questions, keep looking.

Think beyond version one

A solid launch is great—but can their system grow with you? Choose a partner who’s built LLM tools that scale without breaking under real-world pressure.

Don’t gloss over security

If sensitive data is involved, security can’t be an afterthought. The right team will be upfront about how they handle access, logging, and compliance.

Know what you can customize

Some use cases need a lightweight, fine-tuned model—not a giant black box. The right partner should adapt the model to your business, not the other way around.

Support shouldn’t end at deployment

Things will change. Bugs will happen. Make sure your vendor doesn’t disappear after go-live—and that they’re ready to help when things need tweaking.
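
When you ask a vendor how they evaluate their work, it helps to know what even a basic answer looks like. The sketch below scores a model against a small set of prompt and expected-answer pairs using exact match. The `stub_model` function and the test cases are hypothetical; a real evaluation would call the vendor’s endpoint and use metrics suited to your task.

```python
from typing import Callable, List, Tuple


def exact_match_accuracy(
    model: Callable[[str], str],
    cases: List[Tuple[str, str]],
) -> float:
    """Fraction of cases where the model output equals the expected answer
    after trimming whitespace and lowercasing."""
    if not cases:
        raise ValueError("need at least one test case")
    correct = sum(
        model(prompt).strip().lower() == expected.strip().lower()
        for prompt, expected in cases
    )
    return correct / len(cases)


# Hypothetical stub standing in for a vendor's model endpoint.
def stub_model(prompt: str) -> str:
    answers = {"Capital of France?": "Paris", "2 + 2?": "5"}
    return answers.get(prompt, "")


cases = [("Capital of France?", "paris"), ("2 + 2?", "4")]
score = exact_match_accuracy(stub_model, cases)
print(f"accuracy: {score:.2f}")
```

A partner who can show you a test set like this, built from your own data and rerun after every change, is giving a concrete answer rather than dodging the question.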

Conclusion

The LLM landscape is evolving fast, with new tools, providers, and use cases emerging almost daily. Whether you’re building internal tools, customer-facing apps, or industry-specific AI solutions, choosing the right partner can make all the difference.

From open-source platforms to full-service LLM development companies, there’s no shortage of options—only the challenge of picking the one that fits your goals. As with any tech investment, the best results come from aligning capabilities with real business needs. Take the time to evaluate your options, and don’t be afraid to go beyond the usual names.

FAQ

- There’s no one-size-fits-all answer; it depends on what you’re building. Among large language model companies, OpenAI is widely regarded as a leader in general-purpose performance, but if you’re looking for tailored solutions and real hands-on support, companies like InData Labs stand out for their flexible, business-focused approach.

- Models like GPT-4 (OpenAI), Claude (Anthropic), and Gemini (Google DeepMind) are currently among the most capable in terms of reasoning, output quality, and versatility. That said, the “best” model really depends on your use case: some are stronger at code, others at dialogue or summarization.

- LLM service providers include a mix of big tech (like Microsoft Azure AI, AWS Bedrock, and Google Cloud), research-first players (Anthropic, Meta, DeepMind), and smaller specialists like Cohere, AI21 Labs, or InData Labs. Some offer APIs, others full development support or open-source models.

- GPT-4 still leads in adoption due to its wide capabilities and early market presence. But open-source models like LLaMA (Meta) and Mixtral (Mistral) are gaining traction fast, especially among teams that want more control or need to meet specific compliance and hosting requirements.