Neural networks play a central role in artificial intelligence. By loosely imitating how people learn from examples, they have given machines unprecedented capabilities. AI neural networks can now forecast stock market trends, recognize voices, make movie recommendations, detect malignant tumors, and perform other tasks that were long a human responsibility.

With that said, let’s delve into the basics of neural networks and how they relate to other AI components. We’ll also go over the uses of neural networks in artificial intelligence that make a difference today.

What are neural networks in artificial intelligence?

Artificial neural networks (ANNs) are an artificial intelligence technique designed to imitate how a human brain works. An ANN is made up of layers of artificial neurons. It takes some input and, based on the values, outputs a prediction. This prediction can be a classification, an identification, or a regression.

Source: Statista

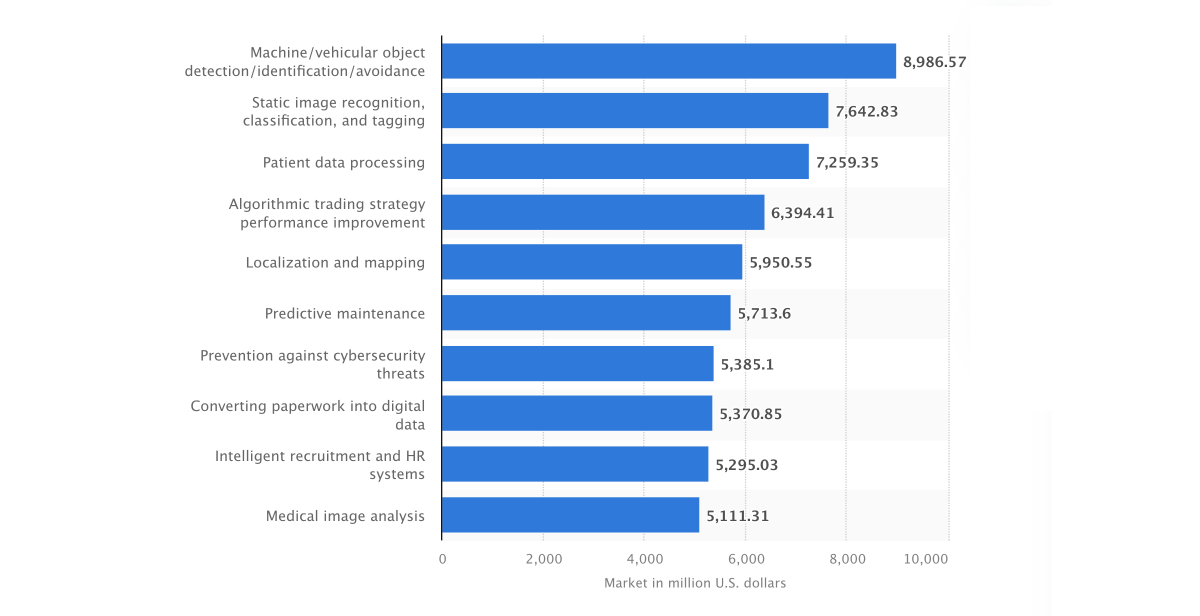

If we look at the most bankable AI areas, artificial neural networks lay the technical foundation for the majority of them. ANNs are great for predicting trends, as well as recognizing patterns in both images and audio files.

Neural networks are algorithms used in artificial intelligence. They are inspired by the human brain and can process large amounts of information, such as voice inputs and photos, to better understand the content. Networks with many layers can learn more complex patterns, provided they have enough training data.

Neural networks vs artificial intelligence

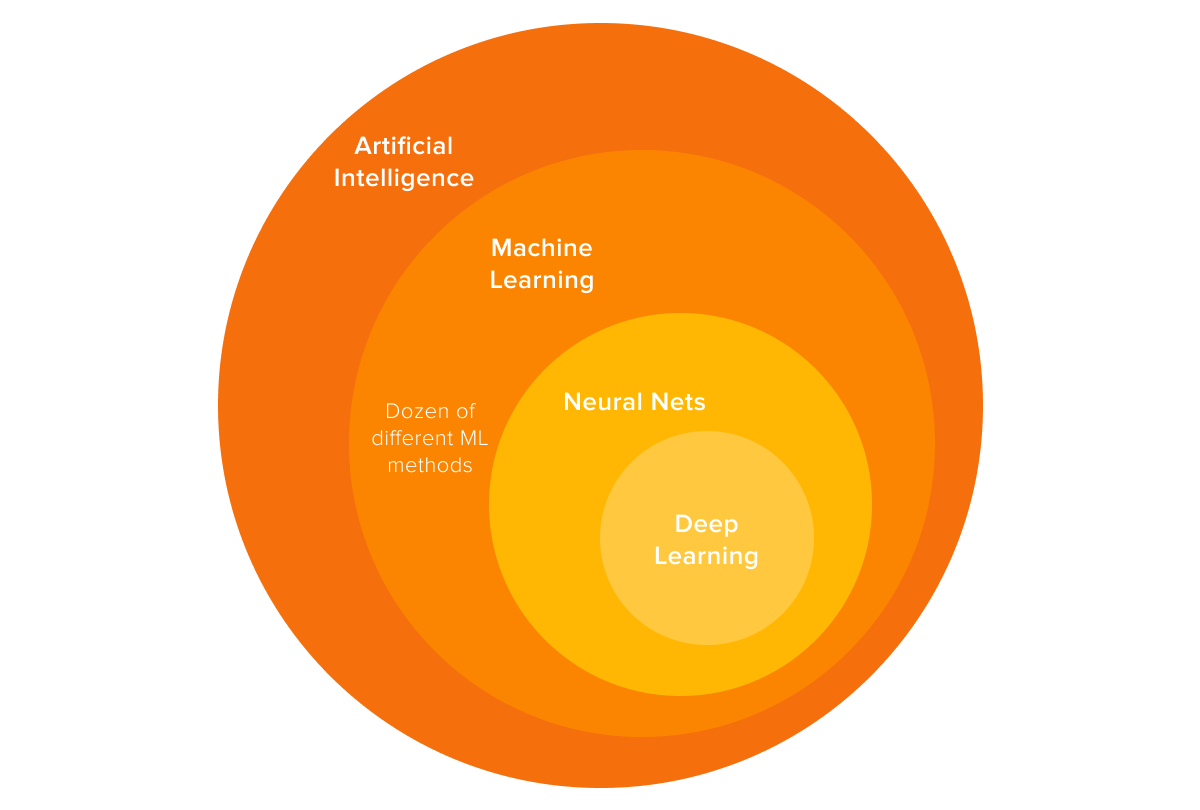

Machine learning and AI have been transforming business over the last few years. Yet, most business owners aren’t aware of the differences between AI-enabled applications. The relationship between AI, machine learning, and neural networks can be expressed as a hierarchy.

Thus, artificial intelligence is an umbrella term that encompasses a set of smart technologies, including ANNs. Neural networks, in turn, are a subfield of machine learning that lays the ground for deep learning and can process large amounts of data. Deep learning and neural nets are often used interchangeably.

As for machine learning, both neural networks and classical ML models can predict or classify an output, and both learn from training data. The main difference is that classical ML models usually rely on features that people prepare by hand, so they need more human involvement up front. A neural network can learn useful features directly from raw data, which reduces manual effort at the initial stages, although it still needs training data and tuning.

From a technical standpoint, many classical machine learning models map input features to an output in a single step. Neural network models, by contrast, pass the data through multiple layers.

Overall, AI, machine learning, and neural networks are complementary technologies, with each providing a foundation for the next. Thus, machine learning use cases often overlap with deep learning ones, such as facial recognition and object detection.

How do ANN models work?

Now let’s see how a neural network model works. ANN models are made up of layers of neurons, which are the core processing units of the network. We can divide all layers into three big groups:

- The input layer is responsible for passing raw data into the network;

- The hidden layers perform the computation and pass the result to the output layer;

- The output layer produces a result.

Thus, if we feed in an image of a dog, the image gets divided into pixels. Each pixel is then fed into a separate neuron of the input layer. For example, if we want to recognize a 28×28 pixel picture, we need 784 neurons in the first layer of our network, one for each pixel in the picture.
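To make the pixel arithmetic concrete, here is a minimal Python sketch of turning a 28×28 picture into 784 input values. The image itself is made-up data, and scaling the values to the 0..1 range is a common preprocessing step that we assume here rather than something every network requires.

```python
# Hypothetical 28x28 grayscale image: each pixel is a brightness value from 0 to 255.
HEIGHT, WIDTH = 28, 28
image = [[(row * WIDTH + col) % 256 for col in range(WIDTH)] for row in range(HEIGHT)]


def flatten(pixels):
    """Turn a 2D grid of pixels into a flat list, one value per input neuron."""
    return [value for row in pixels for value in row]


def normalize(values, max_value=255):
    """Scale raw pixel values to the 0..1 range (an assumed preprocessing step)."""
    return [value / max_value for value in values]


input_layer = normalize(flatten(image))
print(len(input_layer))  # 784, one input neuron per pixel
```

A larger picture simply means a longer input list: the size of the first layer always follows the number of pixels.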

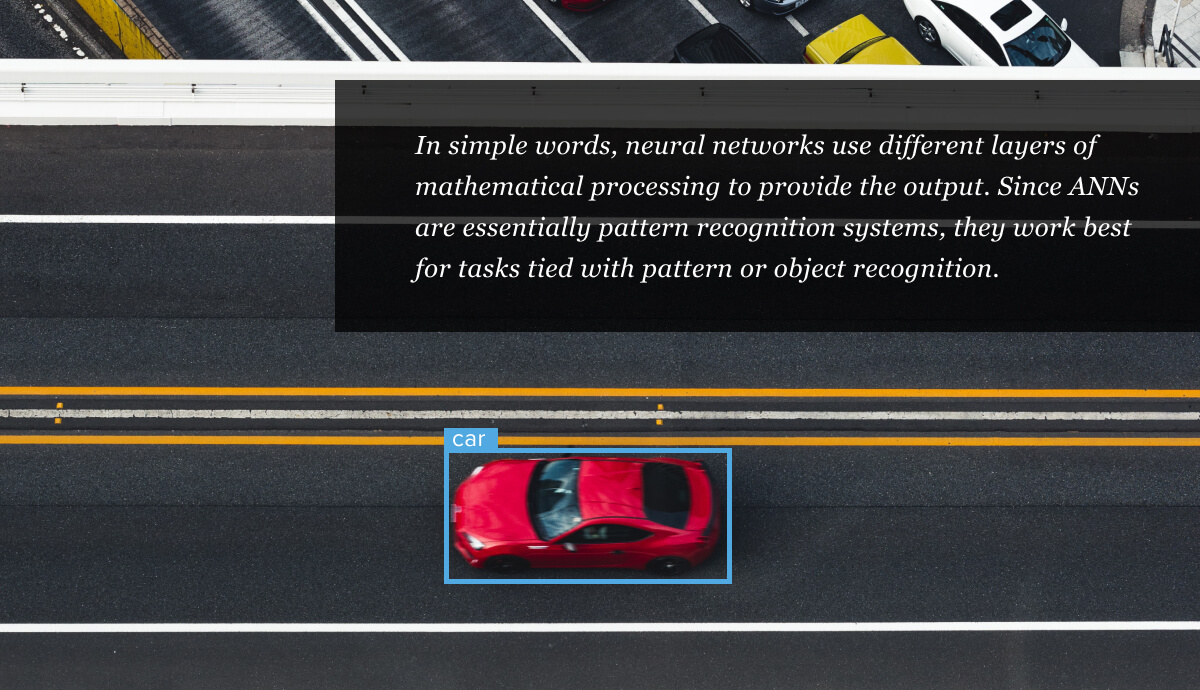

Source: Unsplash

Values from the input layer then go to the hidden layers, where each neuron computes a weighted sum of its inputs and applies an activation function. The hidden layers transform the values and send them to the output layer. The neuron in the output layer with the highest value determines the answer.
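The path from input to answer can be sketched in a few lines of plain Python. This is an illustration only: the weights, the two class labels, and the ReLU activation are hand-picked assumptions, whereas a real network learns its weights during training.

```python
def relu(x):
    """A common activation function: negative values become zero."""
    return max(0.0, x)


def dense(inputs, weights, biases, activation):
    """One fully connected layer: a weighted sum per neuron, then an activation."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


# Hypothetical, hand-picked parameters (a trained network would learn these).
hidden_weights = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]
hidden_biases = [0.0, 0.1]
output_weights = [[1.0, -1.0], [-0.5, 1.5]]
output_biases = [0.0, 0.0]
labels = ["cat", "dog"]  # hypothetical classes, one per output neuron


def predict(inputs):
    hidden = dense(inputs, hidden_weights, hidden_biases, relu)
    scores = dense(hidden, output_weights, output_biases, lambda x: x)
    # The output neuron with the highest value gives the answer.
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores


label_index, scores = predict([1.0, 0.5, 0.2])
print(labels[label_index])  # dog
```

With these numbers the second output neuron scores higher than the first, so the sketch answers "dog".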

Most common types of neural networks

Applications of neural networks in artificial intelligence differ based on their type. The ecosystem of AI neural networks has grown so large that newcomers need a map to navigate the variety of approaches. Let’s have a look at the most popular types and how you can benefit from them.

Artificial nets (ANNs)

Artificial neural networks are the technology most often cited in the AI revolution. In this section, the term refers to the textbook structure with a feedforward layout, which means that these ANNs process inputs in the forward direction only. Feedforward nets are the earliest and simplest form of deep learning models.

This type of net is typically used for non-sequential data that isn’t tied to time and belongs to the supervised type of learning. Thus, ANNs work great for pattern mapping, association, and pattern classification. The input can be image data, text data, or tabular data.

Recurrent nets (RNNs)

Recurrent networks are built around sequential or time-series data. Such data is a typical challenge for standard feedforward networks, which cannot use the order of inputs for learning and prediction. A traditional net treats each input independently, with no notion of sequence.

A recurrent system, however, is designed specifically to capture the sequential characteristics of data and forecast what comes next. This ability is possible thanks to the RNN’s memory, which stores information about previous values. Each stored value, in turn, influences the following one.
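The memory idea can be shown with a deliberately tiny recurrent cell. The sketch below uses a single number as the hidden state and made-up weights (`w_x`, `w_h`, `b` are illustrative names and values); real RNNs use vectors and learned weight matrices.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrent step: the new state mixes the current input with the old state."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Feed a sequence through the cell and collect the hidden state after each step."""
    h = 0.0  # the memory starts empty
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


# Same last two inputs, different first input: the memory keeps the difference alive.
with_signal = run_rnn([1.0, 0.0, 0.0])
without_signal = run_rnn([0.0, 0.0, 0.0])
print(with_signal[-1] > without_signal[-1])  # True
```

Even though both sequences end with the same two values, the first one leaves a trace in the hidden state. This trace is what lets a recurrent net take earlier values into account.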

RNNs are often used for speech recognition and natural language processing.

When working with texts, RNNs can evaluate their grammatical and semantic structure and generate a similar narrative based on the results.

Convolutional neural networks (CNNs)

The convolutional neural network (also known as CNN or ConvNet) is one of the most popular algorithms in deep learning. This is a type of machine learning where a model learns to perform classification tasks directly on an image, video, text, or sound.

While CNNs share the layered structure of recurrent nets, there is a major difference. CNNs are not designed to process temporal information, while recurrent nets can effectively interpret sequential information. Nevertheless, CNNs are among the most popular models since they can build an internal representation of a two-dimensional image. This ability is particularly useful for image analysis, where position and scale matter.
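The two-dimensional representation comes from sliding small filters over the image. Below is a minimal sketch of that operation in plain Python, with no padding and a stride of one. The 4×4 image and the edge filter are hand-written assumptions; a trained CNN learns its filters from data. Strictly speaking, the code computes a cross-correlation, which is what deep learning libraries usually call convolution.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = len(image) - k_h + 1
    out_w = len(image[0]) - k_w + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_h)
                for j in range(k_w)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hypothetical 4x4 image: dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-written filter that responds to a dark-to-bright vertical edge.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The feature map lights up only in the middle column, exactly where the edge sits, so the output keeps track of where in the picture a pattern was found.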

Therefore, convolutional nets are the industry standard for most prediction tasks with image data. They typically require much more input data than simple feedforward ANNs to reach a high accuracy rate.

Generative adversarial networks (GANs)

GANs are a powerful type of network designed specifically for unsupervised learning. These can automatically identify and pick up the patterns in input data to generate or output new examples based on the original dataset.

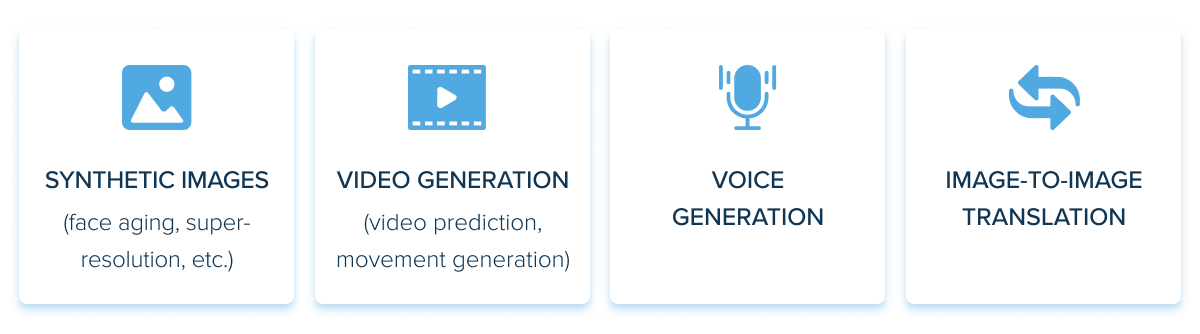

The potential of GANs is massive, as they can mimic a wide range of data regularities. Thus, generative nets can produce structures that are eerily similar to real-world creations, be it images, music, speech, or prose. In some sense, generative adversarial networks are machine artists that can also be used to predict risk and recovery in healthcare.

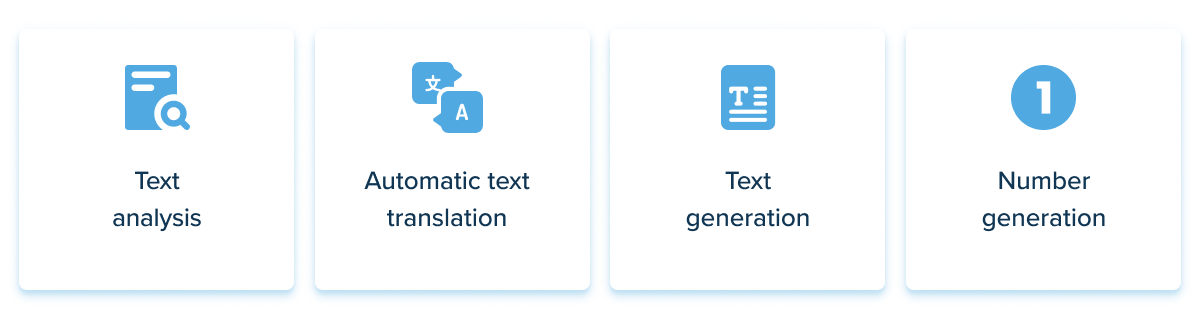

The most common applications of neural networks include:

Despite the versatility of architectures and approaches, neural nets aren’t a cure-all. Therefore, we recommend discussing the relevance of this AI subfield during AI strategy consulting or at the initial stages of your project. As a rule, the final choice will depend on the amount of available data and the complexity of the problem.

So when is the right time and place to leverage this technology? Let’s see.

When artificial neural network application is effective

Neural networks are best at handling complex tasks that call for analytical calculations akin to those of the human brain. We’ve already touched on neural network use cases. Let’s look at them in more detail.

Classification

NNs help cluster and classify data by analyzing its parameters. They allow tech teams to group unlabeled data based on the similarities among the example inputs. Loan approval is a popular application of neural networks: the model predicts customers’ eligibility based on their age, credit history, and other parameters (provided the bank doesn’t have to explain the reason for a rejection).

Prediction

Thanks to hidden layers, neural networks perform better in predictive analytics. Like linear regression models, which map input nodes directly to output nodes, neural networks relate inputs to outputs, but they also use hidden layers to capture nonlinear relationships and improve prediction accuracy. For example, they can predict house prices based on common features such as total area, number of rooms, and others.

Recognition

This is currently the widest application of neural networks, with over $5 billion of market revenue. Their ability to recognize hidden patterns and correlations in raw data is responsible for their dominance in recognition tasks. Also, neural networks can perform multiple tasks in parallel without affecting system performance.

When not to use neural networks

Data science projects do not always call for neural nets, since some tasks are better served by other AI techniques. You don’t need to invest in deep learning and NNs when:

- Your task is not complex – it doesn’t involve unstructured data and does not relate to image classification, natural language processing, or speech recognition.

- Your datasets are small – neural networks achieve the highest accuracy with extremely large datasets and labeled data.

- You need interpretability of the algorithm – most deep learning models are hard to interpret, hence you can’t easily see why certain decisions or predictions are made.

- You are pressed for time – customized models take much time to develop and train.

If you are limited by any of the factors mentioned above, consider using classical machine learning as a foundation for your project.

Top real-life AI neural network examples

As you might guess, all applications of neural networks are data-dependent. Hence, the overwhelming majority of projects are complex and involve lots of unstructured data. Here are some widely known applications of AI nets.

Weather prediction

Quantitative forecasts, including temperature, humidity, and rainfall, play a paramount role in agriculture, as well as for traders in commodity markets. In 2021, Google unveiled MetNet-2, a neural network that can predict the weather up to 12 hours ahead. The company claims that the algorithm can predict precipitation at a resolution of up to one kilometer in space and up to two minutes in time.

This new model even outperforms the one used by the National Oceanic and Atmospheric Administration (NOAA). NOAA’s algorithms predict seven to eight hours and are less accurate. Neural nets account for the groundbreaking success of MetNet-2.

Traditionally, weather forecasts rely on physical models. These models have high computational requirements and are sensitive to physical approximations. And even if all the requirements are met, forecasts from physical models remain useful for only about six days. That is why weather forecasting turned to machine intelligence to bypass these limitations.

Let’s see how neural networks generate weather forecasts. Radar stations and satellite networks send the input to the network. What’s important here is that the input data doesn’t need human verification.

The system takes in data on the presence and composition of clouds, including their speed of movement. MetNet then outputs a probability distribution over the most likely precipitation levels in various regions of the United States.
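A common way for a network to produce a probability distribution is to pass its raw output scores through a softmax function. The sketch below illustrates that general idea only. It is not MetNet’s code, and the precipitation levels and scores are invented for the example.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that are positive and sum to 1."""
    highest = max(scores)  # subtracting the maximum keeps exp() numerically stable
    exps = [math.exp(score - highest) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


# Hypothetical precipitation levels and raw network scores for one map location.
levels = ["none", "light", "moderate", "heavy"]
raw_scores = [2.0, 1.0, 0.1, -1.0]

forecast = dict(zip(levels, softmax(raw_scores)))
most_likely = max(forecast, key=forecast.get)
print(most_likely)  # none
```

Reporting the whole distribution, rather than just the top level, lets a forecast say how confident it is about each outcome.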

Thus, RNNs are a good AI neural network example for predicting weather conditions thanks to their ability to model nonlinear, time-dependent weather data.

Human face detection

Facial detection has long been one of the challenging fields of AI research. This task used to be performed with cascade classifiers (and still is), yet deep learning has revolutionized the field.

The original cascade classifier is fast to evaluate but falls short when it comes to discerning faces from different angles. CNNs, however, can detect faces in a wide range of orientations.

When building facial recognition systems, you have two options:

1. Use a solution with pre-trained models such as dlib, DeepFace, FaceNet, and others. This approach is less time-consuming since it already comes with face recognition functionality. You can also fine-tune the ready-made models with the help of machine learning consulting.

2. Build a facial recognition system from scratch if you need multipurpose functionality. In this case, the datasets will be larger.

Whichever option you choose, facial recognition systems follow a similar path. First, they map facial landmarks from a photograph or video. Then, they compare the results with a database to find a match. To achieve high recognition accuracy, the system is pre-trained on a large array of images, such as the MegaFace database. Pre-training on large datasets is the main training method for face recognition.
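The "compare with a database" step can be sketched as a similarity search. We assume here that each face has already been converted into a short list of numbers (an embedding), which is how models such as FaceNet represent faces. The three-number vectors, the names, and the 0.8 threshold below are made up for illustration, and `find_match` is a hypothetical helper rather than part of any library.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two vectors: close to 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def find_match(query, database, threshold=0.8):
    """Return the most similar known face, or None if nothing passes the threshold."""
    best_name, best_score = None, threshold
    for name, embedding in database.items():
        score = cosine_similarity(query, embedding)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


# Hypothetical database of known faces (real embeddings have far more dimensions).
database = {
    "alice": [0.9, 0.1, 0.3],
    "bob": [0.1, 0.8, 0.5],
}

print(find_match([0.85, 0.15, 0.35], database))  # alice
print(find_match([-0.5, 0.2, -0.7], database))   # None
```

The threshold is the design choice that matters most here: set it too low and strangers get matched, set it too high and known faces are rejected.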

Cancer detection

Intelligent medical imaging is among the most innovative applications of neural networks in artificial intelligence. For years, early cancer detection was extremely difficult due to the limitations of conventional screening methods. AI-based technologies can now detect small abnormalities and help save lives.

In 2019, NCI researchers built a deep learning algorithm that can identify cervical precancers that should be removed or treated. Some AI algorithms have also proven effective at improving the identification of precancerous growths. Improved breast cancer risk models predict the risk of breast cancer and support earlier intervention against malignant tumors.

Another trailblazing project belongs to researchers from New York University. They have presented an algorithm that detects lung cancer in its preventable stages. The neural network recognizes two of the most common types of lung cancer with 97% accuracy. This accuracy is on par with the results of pathologists.

Overall, the global medical imaging market is projected to grow to $56.53 billion in 2028. The rapid development of technologies and COVID-19 impact will further advance deep learning and position it as a viable medical innovation.

Human pose estimation and activity recognition

Human pose estimation is a computer vision technique for estimating the 3D positions of human body parts from a single image. This information can be used to determine, for example, the posture or movements of a person. The method typically works by identifying key points on the body and then estimating the position of each point in 3D space. This data can be used to create animations or even full 3D models of people.

In contrast to traditional methods which use hand-crafted features or stylized models, modern pose estimation algorithms often use deep learning techniques to learn generic features from training data. This has led to significant improvements in performance, with state-of-the-art methods being able to reconstruct poses in real time from a single image.

For example, in 2020, researchers from Adobe and Stanford presented a new state-of-the-art (SOTA) method for human pose estimation that reportedly outperformed existing methods. The new approach, which uses a deep neural network, is able to estimate the positions of human body parts in 3D with unprecedented accuracy. It also generates more plausible motions than other methods.

This could have a number of important applications, such as helping people with disabilities or assisting in the development of better prosthetic devices.

Defect detection

In modern manufacturing, there is a constant push to produce products with fewer defects. Automated inspection systems are a key part of achieving this goal. Over the past few decades, there has been a major shift towards using artificial neural network applications for defect detection.

By applying neural networks, systems can automatically identify and isolate defects in products as they are being manufactured. This can be done in real time, without the need for human intervention. The benefits of this technology include reduced manufacturing time and cost as well as improved product quality and safety.

Airbus, a commercial aircraft manufacturer, uses a drone-based aircraft inspection system. The system relies on artificial intelligence and deep learning to accelerate and facilitate visual inspections.

Autonomous robots

The application of artificial neural networks in robotics might sound like science fiction, but it’s gaining traction in 2022. The global robotics market stood at around $27 billion in 2020 and is projected to reach $74.1 billion by 2026. However, robotics still faces numerous challenges, including efficient power sources, reliable AI, and environment mapping.

Applying artificial neural networks in robotics helps developers ease these challenges. Neural nets are often used in robotics for tasks such as navigation and recognition. They can be trained to recognize certain objects or patterns, which is particularly useful in applications where a robot needs to be able to navigate its environment and interact with a wide range of objects.

Additionally, neural networks can be used for tasks such as motion planning and trajectory optimization, allowing robots to move around more efficiently and navigate complex environments.

Starship robots are among the many that see the world through neural networks. These networks help delivery bots navigate around pedestrians, stay on the sidewalk, and cross the road safely. According to the company, deep learning along with sensors and radars enables obstacle detection, which wraps the robot in a ‘situational awareness bubble’.

The final word

Our world is inevitably heading toward an automated future where smart systems will take on the majority of business tasks. Be it industrial robots or unmanned cars, neural nets bolster innovation and lay a tech foundation for most smart systems.

Neural networks are important for artificial intelligence because they are modeled on the workings of the human brain and can learn from data. This makes them well-suited to tasks such as image recognition, voice recognition, and natural language processing. For companies, this technology means unmatched operational abilities, reduced costs, and new business horizons.

Build your own neural network with InData Labs
