Machine learning could become one of the most disruptive technologies for businesses, increasing the global GDP by 14% from now until 2030, yet many organizations fail to capitalize on it fully. New machine learning techniques can help reverse that trend.

Despite its already impressive impact on the business world, machine learning is still a young field. As a result, many of the practices people engage in with it aren’t optimal, and new, more promising use cases emerge regularly. Staying on top of these machine learning solutions can help companies take full advantage of these technologies.

With that in mind, here are 10 new machine learning techniques that businesses can apply across their operations.

Technical machine learning techniques

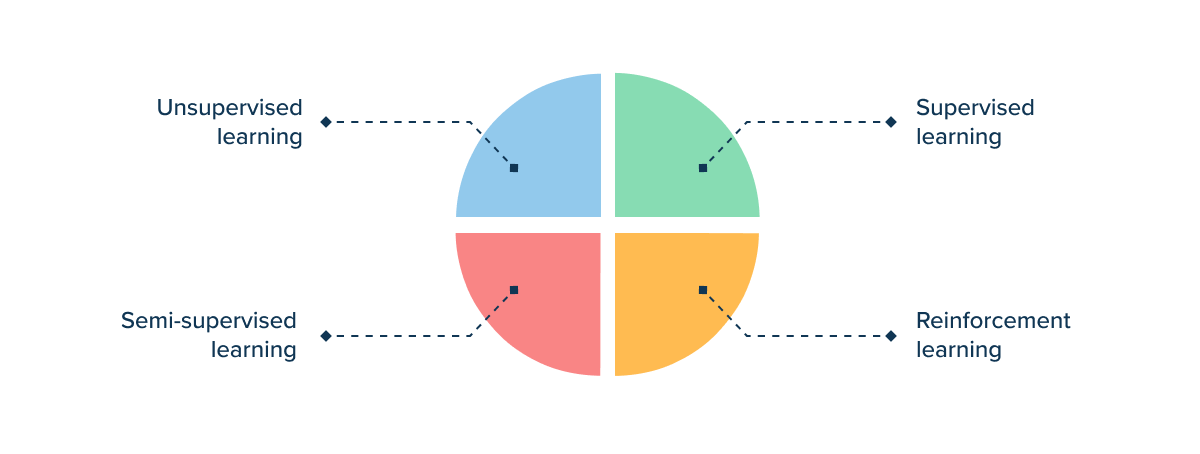

The first and most crucial group of machine learning techniques are technical ML methods and technologies. Overall, machine learning falls into four main categories:

These four techniques remain the foundation of modern machine learning, but companies have more than four options when creating and applying these models. These are broad umbrella categories covering a vast array of specific techniques, many of which have only emerged or become applicable in the past few years. Looking past these general labels is the first step toward more effective ML implementation.

Similarly, many innovations in machine learning technology have emerged recently. These offer new ways to approach historical ML issues, provide easier steps to implementation, or help reduce related costs. If businesses overlook these new methods, they won’t likely be able to use machine learning to its full potential.

With more than 90% of firms reporting ongoing investment in AI, these machine learning techniques will likely grow and advance in the coming years. Even if companies don’t adopt them right now, they deserve attention.

1. TinyML

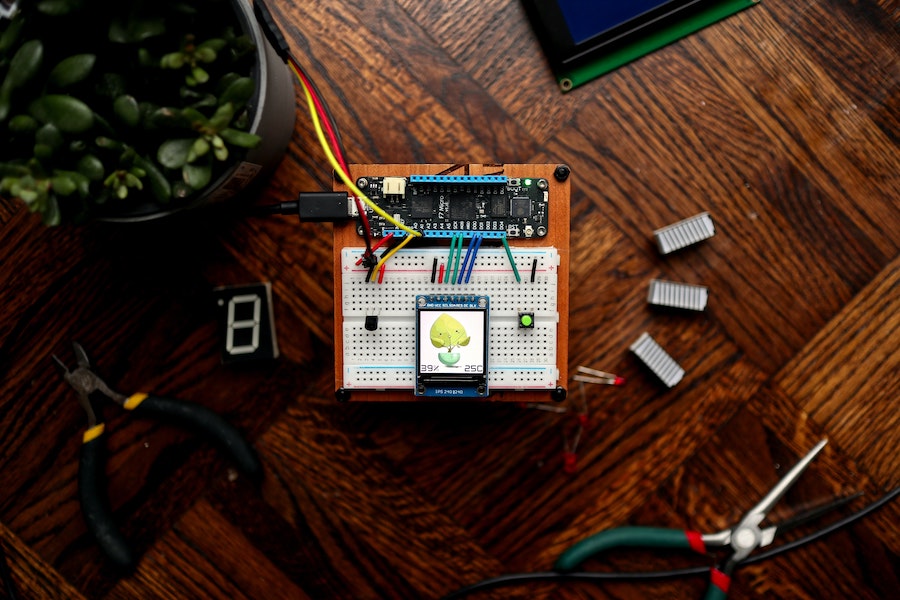

One of the most significant recent developments in machine learning techniques and algorithms is tinyML. As the name suggests, tinyML is machine learning on a much smaller scale. It uses quantization and delegation to make ML models smaller and lower-power, enabling them to run on microcontrollers instead of traditional processing units. TinyML also includes hardware innovations like more powerful microcontrollers and digital signal processors.

Smaller-scale machine learning applications are typically the most helpful for businesses. Narrower use cases make it easier for ML algorithms to produce reliable results. Since tinyML runs calculations entirely on local edge devices instead of a remote data center, it also reduces latency, consumes less power, and improves security.

Source: Unsplash

IoT-heavy businesses and industries stand to gain the most from tinyML. Manufacturers can use it to run predictive maintenance analysis on-device, reducing maintenance costs without extensive IT infrastructure. Healthcare organizations can use it to enable easier real-time health monitoring for remote patients.

Off-the-shelf tinyML frameworks are already available for use, too, such as Google’s TensorFlow Lite, which launched in 2019, letting developers deploy TensorFlow applications on mobile devices. Any company that could benefit from processing data closer to its source should look into these frameworks.

2. AutoML

Lack of expertise is one of the most significant barriers in data science applications. It’s tied with lack of quality data as the most common reason for failure in machine learning projects, with 34% of organizations reporting it. AutoML, or automated machine learning, aims to address these concerns by minimizing the need for experienced users.

AutoML platforms automate much of the machine learning process, including:

- Identifying relevant data sets

- Preparing that data

- Choosing the ideal algorithm to use

- Building a model based on that algorithm

- Training and revising the model

- Running the model in practice.

Traditionally, all of these processes would be manual, taking considerable time and requiring extensive machine learning knowledge. AutoML simplifies them by only requiring users to fill out templates to start and refine the process. They can then work with the model through a common coding language like Python or R. This reduces time and costs and the risk of human error.

Lenovo was able to increase its prediction accuracy from 80% to 87.5% and reduce model creation time from four weeks to three days after implementing autoML from DataRobot. Similarly, PayPal reduced model creation time to under two hours and achieved accuracy rates of 95% through H2O.ai’s autoML.

3. No-code machine learning

Similarly, no-code machine learning can also help businesses with minimal technical expertise capitalize on AI. While many modern tools enable companies to implement ML models with minimal coding, some teams lack coding experience entirely. With 73% of IT leaders facing difficulty filling tech positions, coding professionals are becoming an increasingly rare resource.

No-code machine learning mitigates that challenge by removing the need for coding experience. Like autoML, these platforms use extensive automation to streamline the machine learning process, but unlike autoML, they do so with a drag-and-drop interface. Users click buttons and ask questions in plain language to refine ML templates to meet their needs.

Source: Unsplash

Naturally, no-code machine learning methods can’t produce models as sophisticated as traditional methods can. However, it’s a promising alternative for companies that don’t need nuanced or highly advanced ML processes. Simple projects like dynamic pricing, profit projection, demand forecasting, and employee retention analysis are all suitable for no-code ML.

4. Few-shot learning

One of the first things every company learns about machine learning is the importance of quality data. Insufficient or low-quality data will produce unreliable results, so businesses spend $19.2 billion annually to acquire and manage data. Few-shot learning (FSL) techniques in machine learning disrupt this model, requiring far less data to produce reliable results.

FSL, also called low-shot learning (LSL), aims to train ML models with a minimal amount of data through meta-learning. It uses repeated challenges with a small number of examples to teach a model how to learn to solve a problem rather than teaching specifically to solve a problem. As a result, it can produce functional machine learning models without extensive data sets.

FSL’s lower requirements lead to substantial cost and time reductions, but it’s more limited than other types of machine learning. However, it’s sufficient for categorization tasks like computer vision, audio analysis, and natural language processing (NLP). Businesses relying on these tasks can gain the most from FSL.

Artificial intelligence translation can produce the equivalent of reducing the distance between countries by 35% or more by fostering positive relations. That alone is an impressive use case for the types of ML models FSL can produce.

5. Capsule networks

Most machine learning techniques and algorithms attempt to replicate the way humans learn and see the world. However, machines inherently approach some tasks differently than humans, so they need extra help to train cognitive computing models to mimic biological thought processes. Capsule networks (CapsNets) aim to provide that for classification algorithms.

CapsNets use structures called capsules instead of neurons like traditional neural networks, producing outputs in vectors. The network then weighs these vectors in a hierarchical, not necessarily linear, manner, improving recognition and classification. It can then balance both the probability and the pose of an observation, recognizing objects even when they’re in an unusual or incorrect order.

Source: Unsplash

The ability to recognize objects without needing them in a specific arrangement holds substantial promise for machine vision and NLP processes. More recent studies have proven their potential in other areas, too. One CapsNet was 92.2% accurate at identifying chemical compounds, which could aid faster, more effective drug discovery.

Companies working with inconsistent objects to recognize, like natural speech recognition or real-world objects outside of lab environments, are the most likely to benefit from CapsNets.

6. Self-supervised learning

The supervised versus unsupervised learning dynamic is one of the most common and easiest ways to organize ML models. Self-supervised learning models take a hybrid approach where the model trains itself on one part of the data to analyze the other parts more accurately. This emerging machine learning validation technique could lead to dramatic reductions in costs and time consumption.

Since self-supervised learning produces models that don’t need as much information, it’s ideal for more complex tasks. Understanding contextual nuances in natural language is one of the leading use cases. When Facebook employed this technique, its AI proactively detected 94.7% of hate speech that the company removed from the platform, up from 80.5% in the year prior.

Self-supervised learning could also aid robotic surgeries by estimating dimensions within the human body and improving computer vision. Similarly, it could lead to more accurate hazard recognition for self-driving technologies.

7. Physics-guided machine learning

Most machine learning approaches today fall into one of two categories: data-driven or theory-driven solutions. Data-driven models focus on producing results from hard data, which often results in specific answers but may lack contextual understanding. Theory-driven approaches focus on first-order principles before specific data, delivering consistent results but with a narrow scope.

Physics-guided models combine the two. These models use laws of physics to constrain their results and add real-world context into their calculation to produce more insightful and helpful analytics. They use inherent knowledge of real-world principles to fill in gaps and inform their answers, reducing the risk of results that make theoretical sense but don’t apply to the real world.

Source: Unsplash

As their name suggests, physics-guided models are best suited for answering real-world, physical questions. They’re less applicable to machine vision or NLP and more relevant for predicting weather conditions.

One recent study found that physics-guided models are up to 20% more accurate than other models at predicting temperature changes, even with sparse data. This real-world problem-solving is ideal for heavy industries like construction and logistics, as well as scientific research.

What are the different types of machine learning techniques?

These seven technical machine learning approaches can help companies find the best way to create the models they need. However, using the right kind of model or training approach is only part of the equation. Organizations must also apply their machine learning models correctly to achieve optimal performance.

Even a highly advanced, reliable model won’t benefit a company much without proper application. Improper use and training may produce lackluster results, take too much time, or cost too much money for these algorithms to be a worthwhile investment. Thankfully, as machine learning applications have risen, the best practices have become more evident.

These three emerging techniques show the most promise for applying machine learning effectively in real-world environments. While specific steps will vary depending on the use case and data at hand, these general approaches are ideal for many applications.

1. Use multiple basic machine learning techniques

When approaching a machine learning project, it’s easy to focus on finding one ideal technique to produce an optimal model. While businesses should consider this decision heavily, they don’t have to constrain themselves to a single approach. In many applications, especially with predictive models, it’s even better to combine basic machine learning techniques.

Every training technique has unique advantages and disadvantages. Understanding these is key to effectively applying the insights a model produces. It also means companies can minimize the vulnerabilities of each by using several approaches in conjunction. As a result, multi-model approaches are some of the best machine learning techniques for improved business analytics.

Source: Unsplash

Using multiple models will result in higher training costs and time, so it’s not ideal for every application. It’s best suited for issues where models must predict multiple outputs, so it’s one of the best machine learning techniques for sales forecasting. Since forecasting systems are vulnerable to anomalies, combining different techniques like linear regression, decision trees, and time series can help produce the most reliable results.

Netflix is perhaps the most famous example of multi-model machine learning’s potential. The streaming giant uses an ensemble model to optimize user recommendations based on multiple factors. Similar applications can improve machine learning in marketing for other companies.

2. Optimize data storage

Much of the focus in machine learning applications lies on the model and training method in question. However, as more companies have tried to implement these technologies, the need for optimized data storage has emerged. Without proper data management techniques, ML models will be unable to produce an impressive ROI.

ML models themselves often don’t take up much space, especially if they’re off-the-shelf solutions. ResNet-50, for example, is just over 100 MB, but training data sets that accompany it can contain tens of millions of images with a million annotations. If companies don’t have sufficiently large and agile storage for that data, their models will struggle to succeed in time-sensitive applications and could be costly.

Cloud storage is often ideal because it’s easily scalable. However, some applications may need the lower latency of on-premises solutions. Hybrid approaches can be valuable, but these must include architecture to intelligently distribute and scale workloads to keep latency low. Thankfully, as AI has become more popular, more vendors have started to offer flexible, ML-optimized storage solutions.

3. Use ML to label data before analysis

Finally, companies should address how they label data before training or analysis. This process is often one of the most time-consuming in machine learning operations, but companies can minimize it with more ML. Instead of labeling data manually, businesses should consider using an unsupervised model to automate that process.

Unsupervised techniques like clustering, anomaly detection, and association mining can fit data into common categories and uncover hidden structures. Importantly, they can also organize this information far faster than data scientists can, saving considerable time and money. Companies can then build and apply their models faster, achieving quicker ROIs.

Ready-made automated data labeling tools are available and work best with thousands of objects to improve their accuracy.

Machine learning is an evolving field

Machine learning is still relatively new, so new techniques and best practices emerge often. Businesses should stay on top of these changes to find the best ways to build and apply these models.

These 10 machine learning techniques represent some of the most promising approaches for ML applications today. Applying these can help any company make the most of this new technology and push ahead of the competition.

Author bio

April Miller is a senior writer with more than 3 years of experience writing on AI and ML topics.

Get started on a machine learning project with InData Labs

For more machine learning & other tech posts, please take a look at our blog.