The concept of computer vision was first introduced in the 1970s. The original ideas were exciting but the technology to bring them to life was just not there. Only in recent years did the world witness a significant leap in technology that has put computer vision on the priority list of many industries.

Since 2012, when the first significant breakthroughs in computer vision were made at the University of Toronto, the technology has been improving rapidly. Convolutional neural networks (CNNs) in particular have become the neural network of choice for many data scientists, as they require very little pre-programming compared to other image processing algorithms. In the last few years, CNNs have been successfully applied to identify faces, objects, and traffic signs, as well as to power vision in robots and self-driving cars.
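
To make the “very little pre-programming” point more concrete, here is a minimal Python sketch of the sliding-filter operation at the heart of a CNN. The `convolve2d` helper, the `VERTICAL_EDGE` filter, and the toy image are illustrative assumptions, not code from any particular library. In classic image processing, an engineer writes a filter like this by hand; in a CNN, the same kind of weights are learned from training images.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    Like most deep learning frameworks, this does not flip the kernel,
    so strictly speaking it computes a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hand-written vertical-edge filter (an assumption for illustration).
# A CNN would start from random weights and learn filters like this one.
VERTICAL_EDGE = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

# Toy 4x5 grayscale image: dark on the left, bright on the right.
image = [[0, 0, 0, 9, 9],
         [0, 0, 0, 9, 9],
         [0, 0, 0, 9, 9],
         [0, 0, 0, 9, 9]]

feature_map = convolve2d(image, VERTICAL_EDGE)
print(feature_map)  # [[0, 27, 27], [0, 27, 27]]
```

The feature map is zero where the window sees only the flat dark region and large where it covers the dark-to-bright edge. A trained CNN stacks many such filters, which is why far less hand-crafting is needed.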

Greater access to images has also contributed to the growing popularity of computer vision applications. Websites such as ImageNet provide almost instant access to images that can be used to train algorithms. And this is only the beginning. The worldwide library of images and videos is growing every day. According to an analysis from Morgan Stanley, 3 billion images are shared online every day through Snapchat, Facebook, Facebook Messenger, Instagram, and WhatsApp, most of which are owned by Facebook.

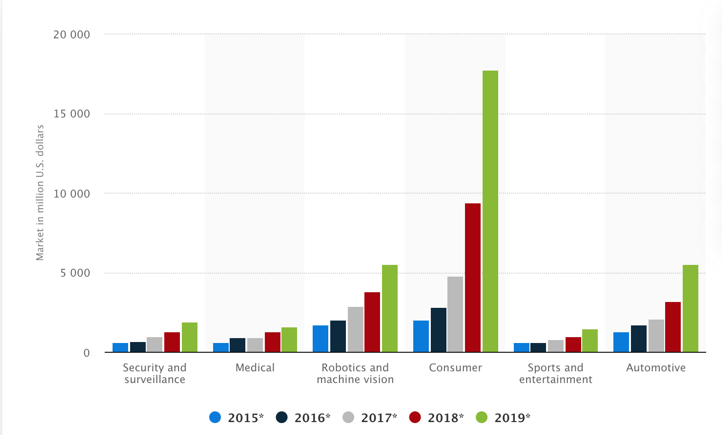

Computer vision artificial intelligence (AI) market revenues worldwide, from 2015 to 2019, by application

Source: Statista

The Future of Computer Vision. New Applications to Come

Computer vision is a booming industry that is being applied to many of our everyday products. E-commerce companies, like Asos, are adding visual search features to their websites to make the shopping experience smoother and more personalized.

Here are some computer vision examples.

Apple unveiled Face ID in 2017. In 2018, the company announced an improved version powered by neural networks. The third generation of Face ID arrived in 2019. Based on a powerful face recognition sensor, it became 30% faster. Today, Face ID is used by millions of people for unlocking phones, making payments, and accessing personal data. Apple has also made Face ID easier to use for people wearing masks. The iOS 13.5 update streamlined the whole process: users now have a better chance of unlocking their phones with Face ID, and if it fails, they’re asked to enter their PIN code.

More money is also being invested in new ventures every year. AngelList, a U.S.-based platform that connects startups and investors, lists 529 companies under the computer vision label. The average valuation of these companies is $5.2M each. Many of them are in the process of raising between $5M and $10M in different stages of funding. It’s safe to say that a lot of money is being poured into developing the technology.

So, why are applications of computer vision gaining such popularity? Because of the potential benefits that can be reaped from replacing a human with a computer in certain areas of our lives.

As human beings, we use our eyes and brains to analyze our visual surroundings. This feels natural to us and we do it pretty well. A computer, on the other hand, cannot do that automatically. It needs computer vision algorithms and applications in order to learn what it’s “seeing”. It takes a lot of effort but once a computer learns how to do that, it can do it better than almost any human on earth.

This can make processes faster and simpler by automating visual tasks. Unlike humans, who can get overwhelmed or biased, a computer can see many things at once, in high detail, and analyze them without getting “tired”. The accuracy of computer analysis can bring tremendous time savings and quality improvements, and thereby free up resources for tasks that require human interaction. So far, this can be applied only to simple processes, but many industries are successfully pushing the limits of what the technology can do.

Computer Vision Applications in Different Industries

Application of computer vision technology is very versatile and can be adapted to many industries in very different ways. Some use cases happen behind the scenes, while others are more visible. Most likely, you have already used products or services enhanced by the innovation.

Automotive

Some of the most famous applications of computer vision come from Tesla and its Autopilot function. The automaker launched its driver-assistance system back in 2014 with only a few features, such as lane centering and self-parking, and set itself the goal of delivering fully self-driving cars sometime in 2018.

Features like Tesla’s Autopilot are possible thanks to startups such as Mighty AI. It offers a platform to generate accurate and diverse annotations on the datasets to train, validate, and test algorithms related to autonomous vehicles.

Source: Unsplash

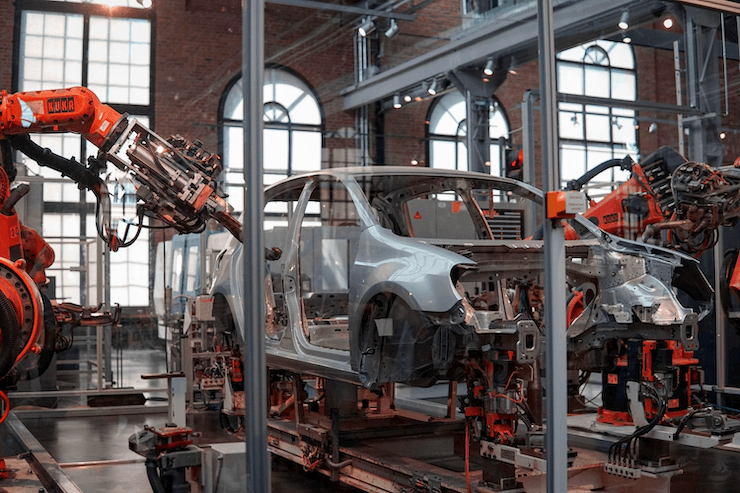

Manufacturing

Computer vision coupled with sensors can work wonders for critical equipment. Today, the technology is being used to monitor important plants and the equipment inside them. Infrastructure faults and problems can be prevented with the help of computer vision that estimates the health and efficiency of that equipment. Many companies are building predictive maintenance into their infrastructure to keep their tools in good shape. For example, ZDT, made by FANUC, is preventive maintenance software designed to collect images from cameras attached to robots. This data is then processed to diagnose trouble and detect potential problems.

Retail

The innovation has made a splash in the retail industry as well.

Walmart is using computer vision to track checkout theft and prevent shrinkage in 1,000 stores across the country. The company has rolled out a Missed Scan Detection program that uses cameras to detect scan errors and failures almost instantly. Once an error is detected, the technology notifies checkout managers so they can address it. The initiative helps reduce ‘shrinkage’, a term that covers theft, scan errors, and fraud. So far, the program has proved effective in digitizing checkout surveillance and preventing losses.

A startup called Mashgin is working on a solution similar to Amazon Go: a self-checkout kiosk that uses computer vision, 3D reconstruction, and deep learning to scan several items at the same time without the need for barcodes. The company claims the product reduces checkout time by up to 10x. Its main customers are cafeterias and dining halls operated by Compass Group.

Financial Services

Although the technology has not yet proved to be disruptive in the world of insurance and banking, a few big players have implemented it in the onboarding of new customers.

Bank of America is no stranger to AI. The bank is a big fan of data analytics and uses it for effective fraud management. Slowly but surely, it is also adopting computer vision, applying it to resolve billing disputes. By analyzing dispute data, the technology quickly delivers a verdict and saves employees’ time. CaixaBank is also welcoming computer vision. In 2019, it allowed its clients to withdraw money from ATMs using face recognition. The ATM can recognize 16,000 facial points on an image to verify a person’s identity.

Healthcare

In healthcare, computer vision has the potential to bring in some real value. While computers won’t completely replace healthcare personnel, they have good potential to support routine diagnostics that demand a lot of time and expertise from human physicians but don’t contribute significantly to the final diagnosis. In this way, computers serve as a supporting tool for healthcare personnel.

For example, Gauss Surgical is producing a real-time blood monitor that solves the problem of inaccurate blood loss measurement during injuries and surgeries. The monitor comes with a simple app whose algorithm analyzes pictures of surgical sponges to accurately estimate how much blood was lost during surgery. This technology could save around $10 billion in unnecessary blood transfusions every year.

One of the main challenges the healthcare system faces is the amount of data being produced by patients. It’s estimated that the volume of healthcare-related data triples every year. Today, we as patients rely on the knowledge of medical personnel to analyze all that data and produce a correct diagnosis. This can be difficult at times.

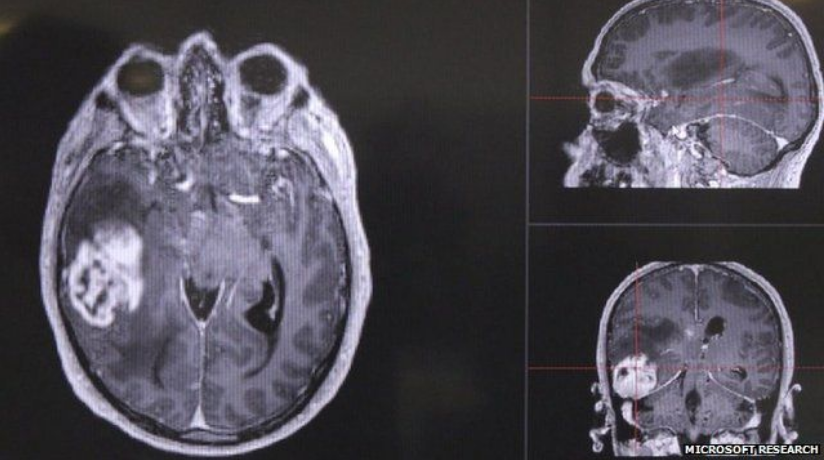

Microsoft’s Project InnerEye is working on solving parts of that problem by developing a tool that uses AI to analyze three-dimensional radiological images. The technology can potentially make the process 40 times quicker and suggest the most effective treatments.

Source: Microsoft

Agriculture

Agriculture has always been steeped in tradition, and computer vision is here to change that. What exactly can the technology bring to the table? It can help with mapping, soil analysis, livestock counting, evaluating crop yield and ripeness, and more. RSIP Vision has developed a number of agriculture solutions. Using deep learning together with sensor and satellite imagery, the company can estimate seasonal yield before harvesting, and it lets farmers estimate yield using their smartphones or tablets. One Soil Platform develops solutions that help collect field data and monitor plants. More importantly, the technology can help with routine and time-consuming tasks like planting, harvesting, and evaluating plant health and development. Taken together, these tools help farmers streamline their work.

Surveillance

Computer vision helps secure public places such as parking lots, subways, railway and bus stations, roads, and highways. Its security applications are diverse: face recognition, crowd detection, abnormal human behavior detection, illegal parking detection, speeding vehicle detection, and more. The technology helps strengthen security and prevent accidents of various kinds. Racetrack, for example, unveiled surveillance solutions that detect abnormal activities and alert managers so they can intervene.

Challenges of Applied Computer Vision

As illustrated above, the technology has come a long way in terms of what it can do for different industries. However, this field is still relatively young and prone to challenges.

Not Accurate Enough for the Real World

One major factor behind most of these challenges is that the technology is still not comparable to the human visual system, which is what it essentially tries to imitate.

Computer vision algorithms can be quite brittle. A computer can only perform tasks it was trained to execute, and it falls short when introduced to new tasks that require a different set of data. For example, teaching a computer what a concept is can be hard, but it is necessary in order for the computer to learn by itself.

A good example is the concept of a book. As kids, we know what a book is, and after a while can distinguish between a book, a magazine or a comic while understanding that they belong to the same overall category of items.

For a computer, that learning is much more difficult. The problem is compounded when we add ebooks and audiobooks to the equation. As humans, we understand that all those items fall under the same concept of a book, while for a computer the parameters of a book and an audiobook are too different to be put into the same group of items.

In order to overcome such obstacles and function optimally, computer vision algorithms today require human involvement. Data scientists need to choose the right architecture for the input data type so that the network can automatically learn features. An architecture that is not optimal might produce results that have no value for the project. In some cases, an output of an algorithm can be enhanced with other types of data, such as audio and text, in order to produce highly accurate results.

In other words, the technology still lacks the high level of accuracy that is required to function efficiently in the real, diverse world. As the development of this technology is still in progress, much tolerance for mistakes is required from the data science teams working on it.

Lack of High-Quality Data

Neural networks used for computer vision applications are easier to train than ever before but that requires a lot of high-quality data. This means that the algorithms need a lot of data that is specifically related to the project in order to produce good results. Despite the fact that images are available online in bigger quantities than ever, the solution to many real-world problems calls for high-quality labeled training data. That can get rather expensive because the labeling has to be done by a human being.

Let’s take the example of Microsoft’s Project InnerEye. The tool uses computer vision to analyze radiological images. The algorithm behind it most likely requires well-annotated images in which different physical anomalies of the human body are clearly labeled. Such work needs to be done by a radiologist with experience and a trained eye.

According to Glassdoor, the average base salary for a radiologist is $290,000 a year, or just short of $200 an hour. Given that only around 4-5 images can be analyzed per hour, and an adequate data set could contain thousands of them, proper labeling of images can get very expensive.
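
As a rough illustration of how quickly those numbers add up, the short Python sketch below multiplies them out. The hourly rate and the 4-5 images per hour come from the figures above; the data set size of 5,000 images and the `labeling_cost` helper are assumptions chosen purely for the example.

```python
def labeling_cost(num_images, hourly_rate, images_per_hour):
    """Estimate the cost of having an expert label `num_images` images."""
    hours_needed = num_images / images_per_hour
    return hours_needed * hourly_rate


HOURLY_RATE = 200      # USD, the rounded hourly figure quoted above
DATASET_SIZE = 5_000   # assumption: "thousands" of images, picked for illustration

best_case = labeling_cost(DATASET_SIZE, HOURLY_RATE, images_per_hour=5)
worst_case = labeling_cost(DATASET_SIZE, HOURLY_RATE, images_per_hour=4)

print(f"${best_case:,.0f} to ${worst_case:,.0f}")  # $200,000 to $250,000
```

Under these assumptions, each labeled image costs $40 to $50, so even a modest data set runs into six figures before any model training starts.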

In order to combat this issue, data scientists sometimes use pre-trained neural networks that were originally trained on millions of pictures as a base model. In the absence of good data, it’s an adequate way to get better results. However, the algorithms can learn about new objects only by “looking” at the real-world data.
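
The idea of reusing a pre-trained network can be sketched in a few lines of plain Python. This is a toy under stated assumptions, not a real pipeline: `frozen_features` is a hypothetical stand-in for a frozen pre-trained base model (a real one would be a CNN trained on millions of pictures), and only the small classification “head” on top of it is trained on a handful of labeled examples.

```python
import math


def frozen_features(image):
    """Hypothetical stand-in for a frozen, pre-trained base model.

    Two fixed statistics play the role of learned features here:
    mean brightness and mean horizontal contrast between neighboring pixels.
    """
    pixels = [p for row in image for p in row]
    brightness = sum(pixels) / len(pixels)
    contrast = sum(abs(row[i + 1] - row[i])
                   for row in image for i in range(len(row) - 1)) / len(pixels)
    return [brightness, contrast]


def train_head(samples, labels, epochs=500, lr=0.5):
    """Train only a logistic-regression head; the feature extractor stays fixed."""
    feats = [frozen_features(img) for img in samples]
    weights, bias = [0.0] * len(feats[0]), 0.0
    for _ in range(epochs):
        for x, y in zip(feats, labels):
            z = sum(w * xi for w, xi in zip(weights, x)) + bias
            error = 1.0 / (1.0 + math.exp(-z)) - y
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


def predict(image, weights, bias):
    """Return 1 (striped) or 0 (flat) for a new image."""
    x = frozen_features(image)
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


# Tiny labeled data set (invented for illustration): striped = 1, flat = 0.
samples = [
    [[0, 1, 0, 1], [0, 1, 0, 1]],
    [[0.5] * 4, [0.5] * 4],
    [[1, 0, 1, 0], [1, 0, 1, 0]],
    [[0.2] * 4, [0.2] * 4],
    [[0.9] * 4, [0.9] * 4],
]
labels = [1, 0, 1, 0, 0]
weights, bias = train_head(samples, labels)

unseen_striped = [[0, 0.8, 0, 0.8], [0, 0.8, 0, 0.8]]
unseen_flat = [[0.7] * 4, [0.7] * 4]
print(predict(unseen_striped, weights, bias), predict(unseen_flat, weights, bias))
```

Because the feature extractor is reused as-is, five labeled examples are enough to fit the head in this toy. The same design choice is what lets teams with limited data start from a network someone else has already trained.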

Key Takeaways

Now that the technology has finally caught up with the original ideas of the computer vision pioneers of the 70s, we are seeing it implemented in many different industries. Both big players, like Facebook, Tesla, and Microsoft, and small startups are finding new ways for computer vision software to make banking, driving, and healthcare better.

The main benefit of the technology is the high accuracy with which it can replace human vision if trained correctly. A number of processes that are done by people today can be handed over to artificial intelligence applications, which eliminates mistakes due to tiredness, saves time, and cuts costs significantly.

As great as computer vision algorithms are today, they still suffer from some big challenges. The first is the lack of well-annotated images needed to train the algorithms to perform optimally, and the second is the lack of accuracy when they are applied to real-world images that differ from those in the training dataset.
