Cognitive Computing and Artificial Intelligence (AI) are often treated as the same thing, and the terms are used interchangeably by people who do not work in the technology industry. But questions about Cognitive Computing vs. AI keep coming up, and they can no longer be ignored.

The confusion is not a surprise, since both terms imply that computers perform jobs that humans would otherwise do, and both technologies have a set place in our everyday lives. In reality, however, many of us are not clear about what Cognitive Computing and AI actually mean. Is Cognitive Computing the same as AI? No, it is not. This article dives deep into the two concepts and then looks at the difference between Cognitive Computing and Artificial Intelligence. Let us begin.

What is Cognitive Computing?

Cognitive Computing is the use of computerized models that imitate human thought processes to solve complex problems whose answers may be uncertain.

Cognitive systems are built on the idea of self-learning: they use machine-learning techniques to carry out human-like tasks intelligently. IBM describes such systems as ones that learn at scale, reason with purpose, and interact with humans naturally.

Moreover, Cognitive Computing combines techniques such as pattern recognition, data mining, and natural language processing so that computers can mimic how the human brain works and deliver an output.

Features

Why is Cognitive Computing critical? The technology uses machine learning models to simulate the way the brain operates. Here are four traits that make it so important in this day and age:

#1. Adaptability

Cognitive Computing applications must be flexible enough to learn as information changes and as their surroundings evolve. They should gather data dynamically in real time and understand goals much as a human brain does, for example by deciding which data to collect and which priorities matter most.

#2. Level of Interaction

To understand the needs and demands of users, cognitive systems must interact with them effectively. Similarly, the system should interact with other machines, such as processors and cloud services, for improved decision-making.

By deploying natural language processing, deep learning, and machine learning, a cognitive system can understand human feedback and provide meaningful outcomes. Intelligent chatbots such as Mitsuku are one example.

#3. Iteration and Statefulness

A Cognitive Computing system must be able to pin down a problem by asking further questions or pulling in additional data when the problem statement is incomplete. It should also “remember” previous interactions and return information that is appropriate to the current application at that point in time.
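
The behavior described above can be illustrated with a minimal sketch. Everything in it is hypothetical: the `CognitiveSession` class, its `required_fields`, and the wording of the replies are assumptions made for illustration, not part of any real cognitive platform. It only shows the two ideas from this section: keeping state between interactions and asking a follow-up question while the problem is still incomplete.

```python
from dataclasses import dataclass, field


@dataclass
class CognitiveSession:
    """Hypothetical session that remembers facts across interactions."""
    # Assumed fields the system needs before the problem counts as complete.
    required_fields: tuple = ("symptom", "duration")
    known: dict = field(default_factory=dict)    # facts remembered so far
    history: list = field(default_factory=list)  # every interaction, in order

    def tell(self, **facts):
        """Record new facts from the user, then decide what to do next."""
        self.history.append(facts)
        self.known.update(facts)
        return self.next_step()

    def next_step(self):
        """Ask about the first missing field, or confirm the problem."""
        missing = [f for f in self.required_fields if f not in self.known]
        if missing:
            return f"Could you tell me more about: {missing[0]}?"
        return f"Problem understood: {self.known}"


session = CognitiveSession()
print(session.tell(symptom="headache"))  # incomplete, so it asks a question
print(session.tell(duration="3 days"))   # earlier answer is still remembered
```

The second call supplies only the duration, yet the session still knows the symptom from the first call, which is the "statefulness" part; the follow-up question is the "iteration" part.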

#4. Contextuality

Cognitive Computing systems can understand, identify, and extract contextual elements such as syntax, time, place, appropriate domain, regulations, user profile, method, and purpose. They rely on different data sources, including structured and unstructured digital data as well as sensory inputs.

Limitations

Today, there are certain limitations in Cognitive Computing applications that one should consider. The major ones are listed below.

#1. Steep Learning Curve

Cognitive systems require long development and training cycles. They also need extensive and detailed training data to understand the process and improve it. The long training process of cognitive systems is the main reason for their slow adoption.

The complexity and cost of the process make matters worse. In addition, there are serious legal and privacy implications, because cognitive systems work with personal data such as emails, search queries, and downloads. This type of data should never be disclosed, so cognitive systems must find a way to protect it and prevent breaches.

#2. Limited Risk Analysis

Cognitive systems often fail to analyze the risks hidden in unstructured data, such as socioeconomic factors or political developments.

A predictive model may, for example, identify a promising location for oil exploration. But if the country is going through a change of government, the cognitive model should take that into account as well. Thus, for a complete risk analysis and the final decision, human involvement is still required.
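
One way to picture this human involvement is a simple review gate. The sketch below is an illustration under stated assumptions: `SiteAssessment`, `recommend`, the `0.7` threshold, and the `government_in_transition` flag are all hypothetical names and values, and the flag is assumed to be supplied by an analyst because the model itself cannot see it.

```python
from dataclasses import dataclass


@dataclass
class SiteAssessment:
    site: str
    model_score: float              # hypothetical predictive-model output, 0.0 to 1.0
    government_in_transition: bool  # context supplied by a human analyst (assumption)


def recommend(assessment: SiteAssessment, threshold: float = 0.7) -> str:
    """Return a recommendation, escalating to a human when context is risky."""
    if assessment.model_score < threshold:
        return "reject"
    if assessment.government_in_transition:
        # The model likes the site, but political risk is outside its data.
        return "escalate to human review"
    return "proceed"


print(recommend(SiteAssessment("Site A", 0.9, government_in_transition=True)))
print(recommend(SiteAssessment("Site B", 0.9, government_in_transition=False)))
```

The point of the design is that a high model score alone never produces a final decision when the surrounding context is unstable; the system hands the case to a person instead.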

#3. Intelligence Augmentation

Existing Cognitive Computing technologies are limited in how far they can engage with users and make decisions on their own. Such systems are more successful as assistants: they are closer to intelligence augmentation than to AI. They supplement human reasoning and interpretation but rely on humans for important decisions. The problem also becomes more complex as the number of data sources increases, because it is challenging to aggregate, integrate, and analyze unstructured data.

Therefore, stronger business rules are needed to guide the algorithms, along with large volumes of data to train the machines.

Cognitive Computing: Examples

Cognitive Computing has carved a niche for itself in analysis-intensive domains like finance, healthcare, and marketing. Welltok has a cognitive-powered platform called Concierge that processes massive quantities of information for health insurers and medical care providers and makes smart, personalized healthcare recommendations.

Similarly, LifeLearn offers an AI-powered assistant that lets clinicians ask questions anywhere, at any time, on desktop and mobile devices, so that even field veterinarians, including those serving large animals on rural farms, can access information.

BrightMinded has developed a TRAIN ME app that helps sports coaches manage their business. The app analyzes the user data to discover what’s best for them. Based on data analysis, the user gets a personalized workout plan.

What Do You Know About Artificial Intelligence?

AI is a branch of computer science concerned with building systems that can make decisions and perform tasks that typically require human intelligence. In simple words, AI refers to human-like intelligence demonstrated by computers, robots, and software.

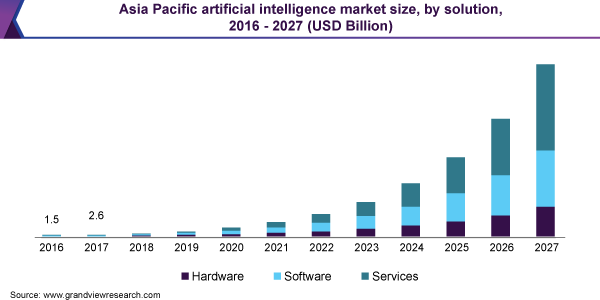

The global artificial intelligence market size was valued at USD 39.9 billion in 2019 and is expected to grow at a compound annual growth rate (CAGR) of 42.2% from 2020 to 2027.

Source: grandviewresearch.com
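
To get a feel for what a 42.2% CAGR means, the compound-growth formula `value * (1 + rate) ** years` can be applied to the figures above. This is only a rough sketch: the function name is made up for illustration, and it assumes the rate compounds on the 2019 value for eight years (2019 to 2027). The report may use a different base year, so its own projection can differ from this back-of-the-envelope number.

```python
def project_market_size(base_value: float, cagr: float, years: int) -> float:
    """Compound a starting value at a constant annual growth rate."""
    return base_value * (1 + cagr) ** years


# Assumption: 2019 value (USD 39.9 billion) compounded over 8 years to 2027.
projection = project_market_size(39.9, 0.422, 8)
print(f"Rough 2027 projection: USD {projection:.1f} billion")
```

Even as an approximation, the result shows how quickly a growth rate above 40% compounds: the market would be more than ten times its 2019 size by 2027.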

And that’s only the beginning. AI has made its way into our lives, and it’s here to stay.

Developing an AI-powered system or app is complex. Robust AI systems and platforms are only possible if the training and optimization process uses data that is relevant to the given tasks. These applications should also be retrained periodically on freshly extracted data to keep their results accurate.

Features

In recent years, it has become clear that research and development in Artificial Intelligence shows no signs of slowing down as various domains increasingly deploy the technology. Every AI-based Cognitive Computing application is built on data: the more relevant data it is given, the better the results it tends to produce. Here is why AI is essential:

#1. Automated Repetitive Tasks

Machines based on AI can efficiently perform repetitive work and save a lot of time and effort for humans. Moreover, automation can help users simplify tedious tasks. In addition to the increased efficiency, AI also reduces overhead costs and provides a safer work environment.

#2. Data Ingestion

Data ingestion is an essential feature of AI, which deals with vast amounts of data. AI systems can store huge amounts of information about multiple entities from various sources. These datasets are updated constantly and are next to impossible for conventional systems to ingest.

AI-enabled systems, in contrast, can efficiently collect and analyze this data and turn it into quality insights. Imagine the amount of information held by a small business of 50 employees, and then by one with 100,000 employees. AI makes it practical to evaluate data at either scale.

#3. Future-Readiness

AI is shaping nearly every industry and has acted as a main driver of emerging technologies such as big data, robotics, and IoT. These technologies observe their surroundings and react to them. They also consider the situations that might come up in the future as a result of the actions taken.

Since AI imitates the way a human mind thinks and solves problems, it often successfully analyzes the environment and behaves appropriately — similar to how humans study their environment, draw inferences, and then act accordingly.

#4. ADA Compliance: Image Recognition on Websites

According to the World Bank, 15% of the world’s population lives with some form of disability. Having accessible websites has become a necessity.

Today, AI supports features such as automatic alternative text generation, which uses image recognition and OCR to describe images to people with visual impairments. Powered by neural networks and machine learning, it can help describe images accurately.

It is predicted that in the next 5 to 7 years, AI-powered image recognition will become so powerful that manually writing alt text for images will no longer be necessary. AI will handle this task on its own.

#5. Environmental Catastrophe Prevention

Many governments worldwide have deployed AI for effective disaster management. With data on thousands of past disasters, AI systems can help forecast the disasters that could occur in the future.

Specifically, AI is being used to predict floods, earthquakes, volcanic eruptions, and hurricanes. To do so, it relies on historical data and other factors. For example, earthquake prediction requires seismic data: AI studies past earthquakes and their aftermath to make judgments.

#6. Chatbots and Face Recognition

Facial recognition systems are emerging as a powerful security system in modern devices. Based on AI, such tools allow individuals to authorize access by verifying their faces.

Chatbots are increasingly being used by medium and large-sized companies. Such apps often serve as the first point of contact with potential customers and resolve their issues through voice or text. They generate their replies efficiently, based on human behaviors and past replies.

Limitations

Even though AI has plenty of pros, it is not free from cons. Here are the top three.

#1. Lack of Creativity

AI is brilliant, but it can never match human creativity. It still lacks the foundations for spontaneous and creative thought processes. Imagine representing things as concepts rather than just pure data — AI is not capable of that.

While AI systems can help determine color preferences or a product’s pricing, humans can generate ideas in a truly creative fashion.

#2. High Maintenance Levels

AI-based software solutions require regular updates and data feeding along with extensive training sessions to drive quality results.

This helps them adapt to the continually changing business environments. However, the learning curve is steep and takes a lot of human effort. Also, AI models are expensive, and companies should consider the ROI before integrating AI.

#3. Shortage of Strategic Solutions

Due to the lack of an expert talent pool and absence of buy-in from management, AI activities are often not designed at a strategic level. This considerably waters down the role of Artificial Intelligence in business as the technology adds little to fulfill long-term objectives.

AI Examples

The technology is especially important in industries such as manufacturing, healthcare, and customer experience (CX). Let us dive deep into some use cases:

- Thanks to AI, classrooms are changing. The technology provides a collaborative solution for teachers to personalize lessons to meet the unique needs of a student.

- AI in healthcare can reduce prescription dose mishaps, decrease payment fraud, and facilitate human involvement in clinical trials to save lives.

- Artificial Intelligence can also help disadvantaged or underserved communities benefit from better credit decisions. Machine learning sits at the center of increasingly sophisticated models that can reduce financial risks in the future.

Endnotes

To sum it up, there is a difference between Artificial Intelligence and Cognitive Computing. Although the underlying purpose of AI and Cognitive Computing is to simplify tasks, the difference lies in the way they are approached.

AI is used to augment human thinking and solve complicated problems. It concentrates more on providing accurate results. Cognitive Computing, in contrast, aims to imitate human behavior and to solve complex issues the way humans would.

Author Bio

Lucy Manole is a creative content writer and strategist at Marketing Digest. She specializes in writing about digital marketing, technology, entrepreneurship, and education. When she is not writing or editing, she spends time reading books, cooking and traveling.