Companies looking to stay ahead of the curve are increasingly engaging in AI exploration. They are experimenting with various AI models, assessing their potential impact on business and people. However, this exploration comes with challenges, particularly in the realm of AI and data privacy. As AI models rely on large datasets, often containing sensitive or personal information, businesses must carefully navigate data privacy regulations and privacy-preserving techniques.

Cisco Data privacy benchmark study reveals that over 90% of respondents believe that generative AI demands new strategies for managing data and mitigating risks. This underscores the critical need for thoughtful governance. In this article, we explore how businesses can implement effective governance frameworks to address Generative AI data privacy.

Why data management matters

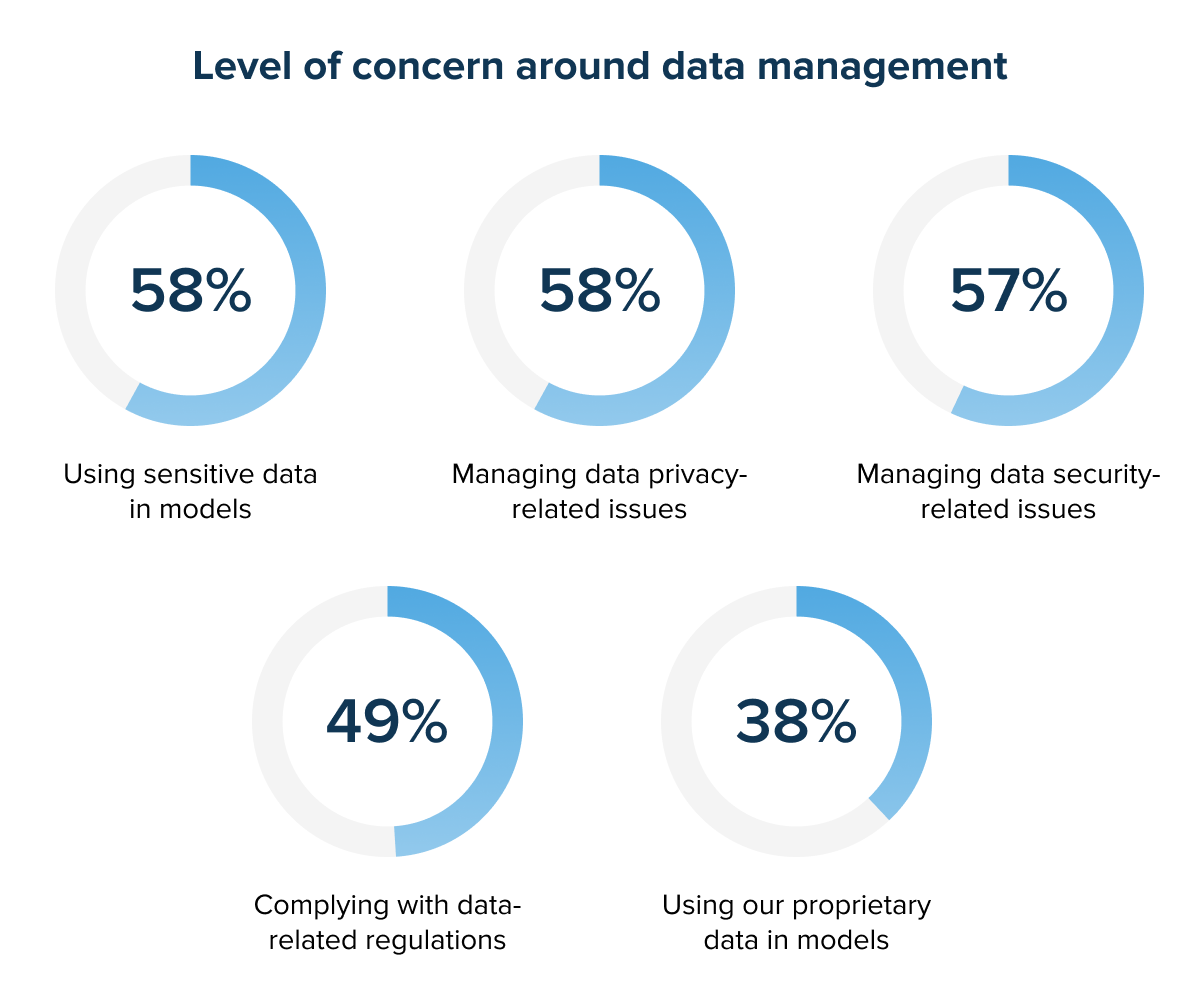

Data is the core of AI applications. Without high-quality and well-managed data, AI models cannot function effectively. At this point, many businesses are facing challenges meeting these requirements. A recent Deloitte survey shows that companies have some ongoing concerns about data that hinder the implementation and scaling of GenAI solutions. The infographic below illustrates that, highlighting the most common data-related obstacles.

Generative AI places specific demands on data architecture and management. Experts consistently emphasize four key principles: quality, privacy, security, and transparency. These pillars form the backbone of efficient data management and ensure that models are powerful, scalable but also ethical, and compliant with legal standards.

- Quality: High-quality data is accurate, relevant, and representative of real-world scenarios. Effective data management helps businesses avoid errors and biases, enabling the development of reliable AI models. Continuous monitoring and updates are essential to ensure data remains relevant and maintains its effectiveness over time.

- Privacy: Data management must comply with privacy regulations like GDPR and CCPA, which dictate how data is collected, processed, and used. Adhering to these laws fosters trust and reduces the risk of privacy breaches, ensuring data is handled in a responsible and transparent manner.

- Security: Protecting sensitive information in AI systems is crucial. Implementing advanced security protocols, such as encryption and access control, safeguards data and limits access to authorized personnel only. AI models should be protected from potential threats that could compromise their integrity or outputs.

- Transparency: Businesses must be able to clearly explain how their AI models process data and make decisions. It’s especially important when AI-driven decisions impact individuals or businesses. Transparency fosters trust and accountability ensuring responsible and ethical AI deployment.

In addition to these core principles for AI governance, businesses can turn to AI TRiSM (Trust, Risk, and Security Management). This holistic approach integrates critical elements such as risk management, trust-building, and comprehensive security practices throughout the entire AI lifecycle. It runs like a red thread through data collection and input, model training, deployment, and continuous monitoring. All for the sake of responsible Generative AI development, where models are not only powerful but also aligned with regulatory and organizational values.

AI TRiSM focuses on minimizing a variety of risks that can significantly impact the performance and ethical integrity of AI systems. Moreover, it plays a crucial role in enhancing trust by prioritizing transparency. It provides all stakeholders, such as customers, regulators, and teams, with a clear understanding of how AI models use data, make decisions, and generate outputs. In this way, businesses can navigate the complexities of AI ethics and compliance and responsibly deploy and maintain Generative AI applications.

Practical solutions for AI data privacy issues

AI for business presents significant opportunities to improve operations, from optimizing customer interactions to refining decision-making processes. However, these advancements bring additional complexity when it comes to managing sensitive data.

Machine learning models rely on large datasets for training, which can contain both internal and external data. Internal data may include sensitive information about employees, financial records, and proprietary business operations. External data can encompass a wide variety of sources, such as customer information, third-party data from suppliers, social media activity, or even public datasets. Meanwhile, AI and data privacy concerns arise when dealing with all sensitive information.

Mitigating AI data privacy issues requires a comprehensive strategy at all stages. One of the key approaches is to anonymize data by removing or altering personal identifiers from datasets. This minimizes the risk of identifying individuals and ensures that even if a breach occurs, the data remains unusable.

Another essential method is end-to-end encryption, which ensures that data is protected at every point of its lifecycle. Encryption transforms sensitive data into unreadable formats and is combined with role-based access control. Moreover, conducting regular security audits and vulnerability assessments is critical to identifying potential weaknesses in AI systems and data handling processes.

When it comes to machine learning development, addressing AI data privacy is particularly critical. Businesses must ensure that sensitive data used in training machine learning models is properly anonymized and protected throughout the entire process.

According to a Cisco Consumer Privacy Survey, 48% of respondents believe that AI can enhance their lives in various ways, like in shopping, streaming services, and healthcare. However, a significant 62% of consumers voiced concerns about how organizations are handling their personal data in AI applications. This highlights a growing need for transparency and trust between businesses and consumers regarding data usage.

From the customer perspective, organizations must implement clear protocols for data retention and obtain explicit user consent to ensure compliance with evolving privacy regulations. By doing so, companies can not only meet legal obligations but also build stronger relationships with their customers, fostering trust in AI-driven services.

Let’s look at this issue in practical cases. AI chatbots that have gained popularity, are an object of data privacy concerns. During AI chatbot development, special attention must be paid to how sensitive customer information, such as contact details, account information, and personal preferences, is handled. They interact with this data, making it critical to implement strict privacy controls.

Any breach or misuse of chatbot-collected data can lead to significant security issues. Their architecture should be based on privacy-by-design principles, incorporating secure data storage, data encryption, and anonymization.

Source: Unsplash

AI surveillance and security play increasingly prominent roles in modern data privacy discussions due to the widespread deployment of AI systems in monitoring and protecting assets, facilities, and people.

AI surveillance refers to the tools that observe, track, and analyze individuals’ activities in both physical spaces and digital environments. For example, facial recognition, automated license plate readers, or analyzing online behavior through browsing history, social media, and communication patterns. The mass collection of personal data, such as biometrics and location history, without explicit consent, is a key issue.

Data breaches could result in identity theft or unauthorized profiling. Security measures like encryption and secure storage must be in place to safeguard this sensitive information. In addition, these AI models need safeguards against manipulation and bias. Ethical guidelines and regulations are necessary to prevent misuse, such as unlawful surveillance or profit-driven privacy violations.

Best practices for AI model security

While Generative AI benefits are evident in enhancing efficiency and innovation, businesses must approach its implementation thoughtfully. Large language model (LLM) development presents unique challenges due to the vast amounts of data involved and the diverse range of tasks these models perform.

Both input and output are subject to regulations. Input data for AI models must comply with regulations regarding privacy and consent, especially when dealing with personal or sensitive information. And as we have already stated, data quality is crucial. Security is again a concern. For instance, attackers may try to damage the input data, which will compromise the integrity of the model.

On the output side, AI-generated content can unintentionally reveal sensitive or personal information, leading to privacy violations. AI outputs must be transparent and explainable to comply with accountability regulations. Outputs can also reflect biases from input data, resulting in discriminatory decisions. If not carefully monitored, AI-generated content can be harmful or misleading, posing ethical and legal challenges.

The responsible use of GenAI-based applications requires companies to develop and integrate new processes to ensure safe and ethical implementation. For example, Deloitte surveyed respondents on the specific steps their organizations are taking. The top three actions identified were establishing a governance framework for the use of Generative AI tools and applications, actively monitoring regulatory requirements to ensure compliance, and conducting internal audits and testing of GenAI systems to evaluate performance, security, and reliability.

A robust governance framework is essential for ensuring the responsible and ethical use of Generative AI. This framework typically includes creating clear policies around data usage, model deployment, and accountability. It should outline roles and responsibilities for AI practitioners, data scientists, and business leaders, ensuring that there is a transparent chain of accountability. In addition, the framework should establish protocols for addressing biases in the model, managing sensitive data, and aligning AI use cases with corporate ethics and compliance standards.

To complement these governance efforts, securing AI models against adversarial threats is equally important. Training AI models to defend against adversarial attacks strengthens LLMs in terms of security and robustness. By incorporating adversarial examples during the development phase, organizations can enhance the model’s ability to recognize and classify malicious inputs. This helps models become more resilient to real-world adversarial threats, although regular updates and re-training are necessary to maintain these defenses. When paired with a strong governance framework, adversarial training ensures the model’s reliability and trustworthiness, protecting both the input and output data.

As models evolve and are deployed in critical systems, regular internal audits become a crucial step in maintaining security and compliance. This involves stress-testing AI models for robustness, accuracy, and resilience to adversarial attacks. Auditing should cover the data pipeline, ensuring that data input and output are properly encrypted and protected from breaches. Companies should also test for bias in AI-generated content, especially if it influences decisions related to sensitive areas such as healthcare, finance, or hiring.

Source: Unsplash

However, securing AI systems goes beyond technical measures. Educating the practitioners is essential. For GenAI systems to be deployed responsibly, practitioners must be trained to identify and mitigate risks associated with AI, such as data privacy issues, model bias, and security vulnerabilities. Training programs should focus on equipping AI developers, data scientists, and business teams with the knowledge to detect anomalies or unethical outcomes in the model’s behavior. This may include providing education on privacy-preserving techniques, ongoing workshops, and certifications.

Finally, human oversight remains essential in the responsible use of AI, especially when AI-generated content can directly impact decisions. Despite their sophistication, AI systems can still produce biased or inaccurate outputs.

Ensuring that a human validator reviews all AI-generated content, particularly in high-stakes sectors like healthcare or law, is critical for maintaining accountability, accuracy, and ethical integrity. This human-in-the-loop approach ensures that AI-generated diagnostics or advice are reliable and ethically sound, reducing the risk of harmful or misleading outputs.

Navigating AI regulations in 2024

Data privacy laws such as GDPR and CCPA are designed to ensure that individuals have control over their personal data, requiring companies to obtain consent, provide transparency, and protect the data they collect. However, these laws often lag behind the technology’s rapid advancement. AI has introduced new issues such as bias, ethics, data manipulation, and transparency.

Recognizing these gaps, the European Union has proposed the Artificial Intelligence Act. It categorizes AI applications into four risk levels: unacceptable risk, high risk (e.g., AI in healthcare and finance), limited risk, and minimal risk. High-risk AI systems will face stringent requirements, including mandatory transparency, human oversight, and clear accountability mechanisms to ensure ethical and safe AI use.

In the U.S. there is an initiative such as the Algorithmic Accountability Act, which would require companies to assess AI models on privacy, fairness, and discrimination. According to this, companies are accountable for the outcomes of their AI models, particularly when those models affect people’s lives.

Source: Unsplash

AI systems can be vulnerable to hacking, data breaches, and other adversarial attacks. And AI models security is an ongoing concern. Laws like the Cybersecurity Act in the EU establish standards for AI-related cybersecurity, particularly in critical infrastructure. Ensuring that AI systems comply with cybersecurity regulations is essential for protecting sensitive data and preventing malicious use of AI technologies.

Staying up-to-date with evolving regulations is a critical part of maintaining compliance in AI operations. Companies must actively monitor changes in data privacy laws, AI ethics guidelines, and security standards. Ensuring compliance also includes conducting regular assessments of how AI models handle personal data.

Final words

As the applications of large language models (LLMs) continue to expand across various industries, ensuring the security of these AI systems has become more critical than ever. Prioritizing AI model security is essential not only for responsible usage but also for maintaining compliance with stringent data protection regulations. Securing these systems safeguards sensitive information, prevents misuse, and fosters trust among users.

By partnering with expert providers, businesses can significantly reduce security risks and ensure their AI implementations are aligned with industry standards for compliance. These providers offer the necessary expertise to protect AI models against emerging threats, ensuring that companies can harness the full potential of AI business solutions while maintaining the highest levels of security and ethical responsibility.

FAQ

-

Securing AI models requires specialized knowledge and expertise to ensure that models are protected from vulnerabilities such as data breaches, adversarial attacks, and misuse. A highly effective approach is to partner with a trusted vendor experienced in AI software development.

These vendors have the necessary tools, best practices, and protocols to build, deploy, and maintain AI model security. In addition, the company can go for AI consulting to evaluate their current models for security gaps. Vendors can conduct a comprehensive analysis and provide recommendations.

-

AI plays a vital role in enhancing data security through several advanced techniques. One of its key applications is threat detection, where AI models analyze large datasets in real time to identify potential threats like malware, phishing attempts, or unauthorized access. Anomaly detection is another critical use, as AI can identify irregular network traffic or user behavior that might indicate insider threats or cyberattacks, even when the anomalies are subtle.

Technology also improves user authentication processes, adding an extra layer of security. And last but not least AI enhances encryption techniques, applying sophisticated algorithms to encrypt and decrypt sensitive data, ensuring that only authorized users can access it.

-

Privacy-preserving techniques in AI are methods used to protect sensitive data while still enabling AI systems to perform effectively. These techniques are crucial for businesses that wish to maintain customer trust and comply with regulations like GDPR or CCPA. They include:

- Data anonymization involves removing personally identifiable information from datasets.

- Differential privacy adds noise to the data or the AI model’s outputs in a way that protects individual information while still allowing accurate analysis.

- Federated learning assumes that AI models are trained across decentralized devices or servers using local data, without the data ever leaving the device.

- Homomorphic encryption allows AI systems to perform computations on encrypted data without needing to decrypt it first.