Large Language Model (LLM) Development and Consulting

Large Language Model Use Cases

Gain a competitive advantage in the market by being AI-first with LLM development services.

-

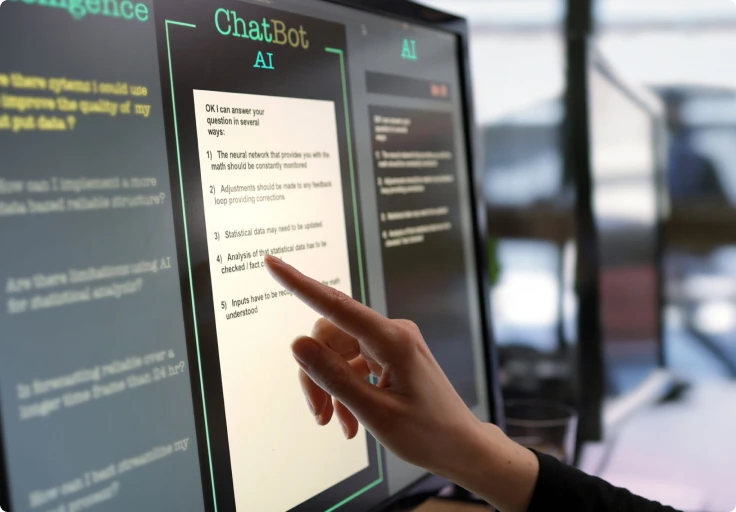

Chatbots and Virtual Assistants

Move from generic bot interactions to personalized messaging, automate upselling, and create cutting-edge digital avatar experiences that guide your customers through the purchase.

Content Generation

Offset the tedium of content creation, generate product descriptions in seconds, and craft coherent and complex text with a human touch for your marketing and sales initiatives.

Translation and Language Services

Expand your business reach to multiple geographies, translate and analyze large volumes of business documents, and operate in a global arena with confidence.

Personalized Recommendations

Increase sales and customer loyalty by creating a tailored shopping experience that meets each customer's individual needs and adapts as customer data changes.

Text Analysis

Create fluent summaries, analyze large volumes of text data, identify hidden patterns and trends, and uncover business insights that support decision-making.

Educational Tools

Pave the way for interactive and engaging learning, automate the creation of learning materials, and analyze data on student performance at scale.

Script Writing

Use LLMs as a creative writing partner, generate starting points for creative concepts and new scripts, and iterate on ideas with unmatched speed.

Enhance your business with top-notch technology!

Build a Custom LLM for Your Industry

Our Expertise in Large Language Model Development

Strategy and Consulting

We help you get a better handle on your business vision and chalk out a step-by-step strategy for adopting language models. During LLM consulting, our experts define a use case, assess your proprietary data, and provide actionable recommendations on your tech infrastructure.

- Business case analysis

- Proof of concept

- Overview of proprietary data

- Project estimation and roadmap

LLM Development

Our engineers build custom LLMs on top of GPT, DALL·E 2, and other foundation models and make them a native part of your tech ecosystem. Our NLP, machine learning, and data science experts help tailor each model to your specific business needs.

- User workflow development

- Custom solutions development

- Dataset preparation

- LLM integration

Fine-Tuning

We customize off-the-shelf LLMs with your data to maximize the value of base models for your business. Our machine learning engineers fine-tune them to your unique business needs, improve their accuracy, and make them more efficient.

- Large language model fine-tuning

- API integration

- Data architecture modernization

- Large language model automation

Support and Maintenance

Our support team keeps a close watch on your large language model, making sure its performance is up to par.

From model optimization to troubleshooting, our generative AI company is there for you 24/7, perfecting, enhancing, and evolving your AI solutions.

- Model monitoring

- Model updates

- Data retraining pipeline

- Risk management and compliance

Seize the Distinctive Benefits of Large Language Models

Increase Revenue

Make your customers feel heard and increase sales with the unlimited potential of LLMs. Custom-built AI software streamlines customer support, generates tailored recommendations, and analyzes customer behavior, so you can focus on growing your business.

Reduce Operational Costs

Cut costs by automating tasks that would otherwise require manual work. From customer experience services to admin tasks, our custom LLM solutions do the heavy lifting of business management and optimize your operations across sales, marketing, customer service, and more.

Find Growth Opportunities

From sentiment analysis to upselling, a custom large language model unlocks novel use cases for your business based on real-time conversation data. LLMs cast a wide net across customer data, external market trends, and social media data to power your decision-making.

Strengthen Your Tech Core

Embed large language models into your applications to ramp up their throughput and enable conversational search.

With LLMs, you can request specific outputs from applications, make the most out of your data, and keep up with increasing workloads.

Integrating Large Language Models, Friction-Free

Our Stack of Large Language Models

Our Broad Expertise Meets Your Needs at Scale

Machine Learning

Our developers push the boundaries of generative AI and create innovative solutions with machine learning. Be it predictive analytics or model training, we supplement your models with all the AI features your business needs.

Natural Language Processing

Drawing on a decade of experience, we help your applications mine data across formats and platforms to unearth hidden insights. Our developers refine your sentiment analysis and customer analytics applications where it matters most.

Cloud Computing

Our cloud engineers make sure you have the right tech infrastructure and operating model to embrace the benefits of language models and AI. When needed, we perform a full-scale cloud migration or optimize your existing cloud resources.

Data Engineering

We rethink your business model with data at its core and set the right data practices in place to give you a long-term platform for AI innovation. Build the foundation for change and stay prepared for future transformations.

Why InData Labs?

Experience You Can Trust

Over 150 global companies have chosen us to deliver AI solutions at scale, and the results speak for themselves.

Speed-To-Market

We owe our immaculate delivery record to calibrated processes and a mature product development approach.

High-Grade Solutions

No matter the challenge, our team of 80+ developers finds an optimal solution that propels your business to new heights.

Trusted by Innovative Companies

Let Our Clients Do the Talking

FAQ

-

A large language model is a type of artificial intelligence that relies on a wide range of NLP, deep learning, and ML algorithms to understand the structure of language. It is trained on a very large dataset to generate accurate responses and keep up with conversations.

Large language models have been shown to outperform traditional models on a variety of tasks, including machine translation, question answering, and sentiment analysis. Also, unlike traditional chatbots and virtual assistants, LLMs can come in handy for a variety of tasks, including text generation, image captioning, summarization, and other use cases.

-

Examples of large language models include GPT-3, which was trained on a dataset that includes 570 GB of filtered web text and can be adapted to a variety of language tasks, such as translation, summarization, and question answering. With 175 billion parameters, it was one of the largest language models ever trained at the time of its release.

Megatron is another example of a large, powerful transformer, with 11 billion parameters. Our team also works with OpenLLaMA, StableLM, PaLM, and other major language models. We select the LLM that best suits your business needs and workloads.

-

A large language model is created by training a neural network on a large corpus of text. The neural network learns to predict the next word (more precisely, the next token) in a sequence based on the previous words. The more parameters the model has, the more capable it tends to be, and the more training data it needs to reach high accuracy.
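To make the next-word objective concrete, here is a deliberately tiny sketch. It is not how production LLMs are built: instead of training a neural network with billions of parameters, it simply counts which word follows which in a three-sentence corpus (a bigram model). The function names `train_bigram`, `next_word_distribution`, and `predict_next` and the sample corpus are hypothetical, chosen only for illustration.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each other word (a toy stand-in for training)."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair every word with the word that immediately follows it.
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_distribution(counts: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn raw follower counts into probabilities P(next word | word)."""
    followers = counts.get(word.lower(), Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}


def predict_next(counts: dict[str, Counter], word: str) -> str | None:
    """Return the most likely next word, or None if `word` was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = [
    "the model predicts the next word",
    "the model learns from text",
    "the model reads the previous words",
]
bigrams = train_bigram(corpus)
print(predict_next(bigrams, "the"))  # → model
print(next_word_distribution(bigrams, "the"))
```

A real LLM replaces the count table with a neural network that conditions on the whole preceding context rather than a single word, which is why more parameters and more data are needed to predict well.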

Unlike traditional AI software, LLMs are general-purpose and can be fine-tuned to match the specific needs of a given business. From sentiment analysis to content generation to granular recommendations, language models can support business operations across multiple areas.

-

The cost of developing, training, and deploying a large language model can vary significantly depending on several factors, including the model’s size, complexity, usage, and whether you’re building it in-house or using a cloud-based API. Here’s an overview of the potential costs involved:

- Training costs

- Hardware and compute costs

- Data costs

- Operational costs (maintenance and fine-tuning)

- Development team costs
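Hardware and compute costs grow with model size. As a rough back-of-the-envelope sketch, the memory needed just to hold a model's weights is its parameter count multiplied by the bytes used per parameter (4 bytes for 32-bit floats, 2 for 16-bit, 1 for 8-bit integers). The function name `weight_memory_gb` is hypothetical, and the estimate deliberately ignores activations, optimizer state during training, and inference-time caches, all of which add substantially more.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory in GB (1 GB = 10**9 bytes) to store model weights only."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Example: a 175-billion-parameter model at three precisions.
for precision in ("fp32", "fp16", "int8"):
    print(precision, weight_memory_gb(175e9, precision), "GB")
```

For 175 billion parameters this gives 700 GB at fp32, 350 GB at fp16, and 175 GB at int8, which is why models of this size are typically spread across several GPUs and why quantization is a common lever for lowering serving costs.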

-

The development of a large language model typically involves several key stages, each of which is crucial to building a robust, effective, and scalable model. Below are the primary stages in LLM development:

- Problem definition and requirement gathering

- Data collection and preprocessing

- Model architecture design

- Model training

- Fine-tuning (optional)

- Evaluation and testing

- Model optimization and compression

- Deployment and integration

- Monitoring and maintenance

- Ethical considerations and bias mitigation

-

GPT is one of the most popular language models. It is built on the transformer neural network architecture, and later versions are refined with reinforcement learning from human feedback (RLHF). This ready-made model can be integrated into applications or customized on proprietary datasets through fine-tuning.